Drishya is a pair of smart glasses designed for visually impaired people. It focuses on assisting them in their day-to-day activities, education, and employment.

Creating a pair of smart glasses like the Drishya smart glasses involves a combination of hardware and software engineering. Below is a step-by-step guide on how to build smart glasses, from concept to execution.

The smart glasses are designed to empower visually impaired individuals by giving them easy access to text through image scanning and conversion to audio, aiding in education, daily tasks, and communication.

Purpose and Features:

- The purpose of Drishya smart glasses is to give visually impaired people more assistance and independence: access to educational materials, easier employment, and the ability to carry out day-to-day activities by themselves.

- To make this possible, we have equipped the Drishya smart glasses with voice assistance, obstacle detection, object recognition, OCR (reading written text aloud), face recognition, navigation, and AI assistance and personalization.

Prototype Design:

- We designed the glasses in Fusion 360 and 3D printed them in PETG.

- We assembled the parts into a prototype that is easy to use.

Component Selection

Sensors and Electronics:

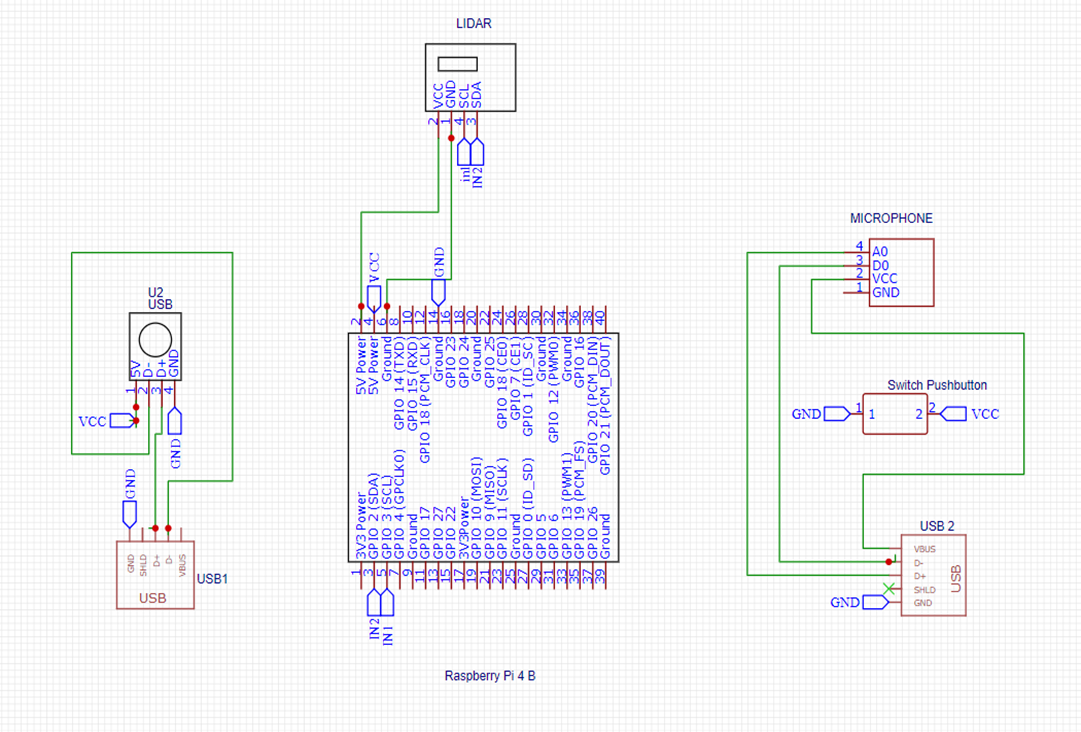

- We have integrated sensors and components such as a lidar sensor, camera module, processor, Bluetooth, buttons, microphone, and speakers.

1. Camera:

● A high-resolution camera is attached to capture clear images of text documents and the surrounding environment.

● The camera should be integrated into the smart glasses frame for convenience and portability.

2. Lidar Sensor:

● The lidar sensor is needed to measure the distance between the user and nearby objects.

● This sensor helps in detecting obstacles and providing spatial awareness to the user.

3. Raspberry Pi Module:

● The smart glasses system is powered by a Raspberry Pi module.

● The Raspberry Pi module serves as the central computing unit for image processing, text recognition, and audio conversion.

4. Earphones:

● Earphones are required to deliver the converted audio output to the user.

● The smart glasses should support the connection of earphones through a wired interface.

● The power source should provide sufficient runtime to support continuous usage throughout the day.

5. Audio Output Device:

● Apart from earphones, the smart glasses include an audio output device for delivering alerts and notifications to the user.

The smart glasses are housed in a durable, lightweight frame. The frame should be comfortable to wear for extended periods and should securely hold all the components in place.
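The requirement that the power source last through a day of use can be checked with simple arithmetic before choosing a battery. The sketch below is only a rough estimate: the function name, the 0.8 usable fraction, and the example figures are assumptions for illustration, not measurements from the Drishya prototype, and it treats capacity and current as being quoted at the same voltage.

```python
def estimate_runtime_hours(capacity_mah: float,
                           avg_current_ma: float,
                           usable_fraction: float = 0.8) -> float:
    """Estimate hours of runtime from battery capacity and average current draw.

    usable_fraction discounts converter losses and the charge that should be
    left in the pack; 0.8 is an assumed rule of thumb, not a measured value.
    """
    if capacity_mah <= 0 or avg_current_ma <= 0:
        raise ValueError("capacity and current must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return capacity_mah * usable_fraction / avg_current_ma


# Hypothetical figures for illustration only: a 5000 mAh pack and an
# average draw of 500 mA for the Pi, camera, and sensor combined.
hours = estimate_runtime_hours(capacity_mah=5000, avg_current_ma=500)
print(f"Estimated runtime: {hours:.1f} h")
```

Measuring the real average current with the camera and sensor running gives a far better input than datasheet values.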

Hardware Development

Assemble Components:

- We have assembled all the hardware components, keeping the assembly lightweight for easy use.

- The circuit diagram is given below

Functional Requirements

Text Recognition and Audio Conversion:

● The system must be able to capture images of text using a camera.

● It should utilize technologies like OpenCV and OCR to detect and extract text from the captured images.

● The extracted text should be converted into audio format using text-to-speech (TTS) technology.

● Users should be able to listen to the converted audio text through earphones connected to the smart glasses.
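The capture, extract, and speak steps above could be wired together roughly as follows. This is a minimal sketch rather than the project's actual code: it assumes Tesseract (through the `pytesseract` package) as the OCR engine, which the write-up does not name, and the function names and the `page.mp3` output path are hypothetical.

```python
import re


def clean_ocr_text(raw: str) -> str:
    """Tidy raw OCR output so it reads naturally when spoken."""
    text = re.sub(r"-\s*\n\s*", "", raw)   # rejoin words hyphenated across lines
    text = re.sub(r"\s+", " ", text)       # collapse newlines and repeated spaces
    return text.strip()


def read_text_aloud(image_path: str, audio_path: str = "page.mp3") -> str:
    """Run OCR on an image file and save the recognised text as speech."""
    # Imported here so clean_ocr_text stays usable without these packages.
    import cv2
    import pytesseract          # assumed OCR engine, not named in the write-up
    from gtts import gTTS

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(f"could not read image: {image_path}")

    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    # Otsu thresholding picks the black/white cut-off automatically.
    _, binary = cv2.threshold(gray, 0, 255,
                              cv2.THRESH_BINARY | cv2.THRESH_OTSU)

    text = clean_ocr_text(pytesseract.image_to_string(binary))
    if text:
        gTTS(text=text, lang="en").save(audio_path)
    return text
```

Cleaning the text before synthesis matters because OCR preserves the line breaks of the printed page, which would otherwise produce unnatural pauses in the audio.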

Object Detection:

● Utilize a lidar sensor to measure the distance between objects and the user.

● Integrate the sensor with the webcam to ensure clear image capture of text documents.

● Provide real-time alerts to the user regarding obstacles or objects in their path through audio feedback.
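One way to turn distance readings into audio alerts is to map each reading to a warning level and speak only when the level changes, so the user is not flooded with repeated messages. The thresholds and function names below are assumptions for illustration; the values used in the real glasses are not given in this write-up.

```python
from typing import Iterable, Iterator, Optional

# Assumed thresholds in metres; tune them against the real sensor.
DANGER_M = 0.5
WARNING_M = 1.5


def obstacle_alert(distance_m: Optional[float]) -> Optional[str]:
    """Return a spoken alert for one reading, or None if the path is clear."""
    if distance_m is None or distance_m <= 0:
        return None  # missing or invalid reading: stay silent
    if distance_m <= DANGER_M:
        return f"Stop. Obstacle {distance_m:.1f} metres ahead."
    if distance_m <= WARNING_M:
        return f"Caution. Obstacle {distance_m:.1f} metres ahead."
    return None


def alerts_for(readings: Iterable[Optional[float]]) -> Iterator[str]:
    """Yield an alert only when the alert level changes between readings."""
    last_level = None
    for distance in readings:
        message = obstacle_alert(distance)
        level = message.split(".")[0] if message else None
        if message and level != last_level:
            yield message
        last_level = level


for alert in alerts_for([3.0, 1.2, 1.0, 0.4, 0.3, 2.0]):
    print(alert)
```

Each yielded string can be passed straight to a text-to-speech function.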

Navigation Assistance:

● Implement a mode for navigation assistance, guiding users through environments with minimal obstacles.

● Utilize image processing techniques to identify and guide users towards doors, pathways, or other navigational landmarks.

● Provide audio instructions to users based on the detected environment, helping them navigate safely.
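Once a landmark such as a door has been detected in the camera frame, its horizontal position can be converted into a spoken direction. The sketch below assumes the detector returns a bounding box as `(x, y, width, height)` in pixels; the function names and the split of the frame into thirds are illustrative choices, not the project's documented method.

```python
def direction_for(box_center_x: float, frame_width: float) -> str:
    """Map a landmark's horizontal position in the frame to a direction."""
    if frame_width <= 0:
        raise ValueError("frame_width must be positive")
    position = box_center_x / frame_width   # 0.0 = left edge, 1.0 = right edge
    if position < 1 / 3:
        return "to your left"
    if position > 2 / 3:
        return "to your right"
    return "straight ahead"


def navigation_instruction(label: str, box, frame_width: float) -> str:
    """Build an instruction from a detection; box is (x, y, width, height)."""
    x, _, width, _ = box
    return f"{label.capitalize()} {direction_for(x + width / 2, frame_width)}."


# A hypothetical door detected on the right side of a 640-pixel-wide frame.
print(navigation_instruction("door", (500, 100, 100, 200), 640))
```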

Compatibility and Integration:

● Ensure compatibility with Raspberry Pi as the primary computing platform.

● Integrate all components (camera, sensors, Raspberry Pi) seamlessly to facilitate smooth operation of the smart glasses.

User Interaction and Control:

● Enable user interaction with the system through voice commands or other accessible input methods.

● Implement controls for adjusting volume, switching modes, and initiating specific actions like text capture or translation.
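Voice controls like these can be organised as a small routing table that maps keywords to handler functions, which keeps new commands easy to add. The class names, keywords, and volume step below are hypothetical and only sketch the idea.

```python
from typing import Callable, Dict, Optional


class CommandRouter:
    """Route a recognised phrase to the first handler whose keyword it contains."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    def register(self, keyword: str, handler: Callable[[], str]) -> None:
        self._handlers[keyword.lower()] = handler

    def dispatch(self, phrase: Optional[str]) -> Optional[str]:
        """Return the handler's spoken reply, or None if nothing matched."""
        if not phrase:
            return None
        text = phrase.lower()
        for keyword, handler in self._handlers.items():
            if keyword in text:
                return handler()
        return None


class Volume:
    """Volume level clamped to the range 0 to 100."""

    def __init__(self, level: int = 50, step: int = 10) -> None:
        self.level = level
        self.step = step

    def up(self) -> str:
        self.level = min(100, self.level + self.step)
        return f"Volume {self.level}"

    def down(self) -> str:
        self.level = max(0, self.level - self.step)
        return f"Volume {self.level}"


volume = Volume()
router = CommandRouter()
router.register("volume up", volume.up)
router.register("volume down", volume.down)
router.register("read", lambda: "Switching to reading mode")

print(router.dispatch("please turn the volume up"))
```

Handlers are checked in registration order, so more specific keywords should be registered before more general ones.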

Data Modelling: E-R Diagram

[Figure: E-R diagram]

Functional Modelling

[Figure: functional model]

To make sure all the functions of the smart glasses work properly and effectively, we first researched the components, then assembled them and programmed them to check their efficiency.


After testing and getting the desired results, we made the design more compact by switching to smaller components, which makes the glasses easier to wear and more efficient.

We used Fusion 360 to design the glasses and printed them in PLA.

The next prototype is shown below.

[Figure: second prototype]

After testing it with a few people and getting their feedback, we improved the design and changed the material to PETG, which makes the frame more flexible and sturdy.

[Figure: final Drishya smart glasses]

These are the final Drishya smart glasses. We have attached an adjustable band so that the glasses are easy to wear and do not fall off.

We have written code for the voice assistant, face recognition, obstacle detection, SOS calling, messaging, and more.

The code for the voice assistant is given below; the rest of the code is attached in the codes section.

    import os
    import sys
    from datetime import datetime

    import pyjokes
    import requests
    import speech_recognition as sr
    import wikipedia
    from bs4 import BeautifulSoup
    from gtts import gTTS
    from twilio.rest import Client

    # Secrets and phone numbers are read from the environment instead of being
    # hard-coded. These variable names are placeholders chosen for this listing.
    NEWS_API_KEY = os.environ.get("NEWS_API_KEY", "")
    TWILIO_SID = os.environ.get("TWILIO_ACCOUNT_SID", "")
    TWILIO_TOKEN = os.environ.get("TWILIO_AUTH_TOKEN", "")
    TWILIO_FROM = os.environ.get("TWILIO_FROM_NUMBER", "")
    EMERGENCY_TO = os.environ.get("EMERGENCY_CONTACT_NUMBER", "")


    def speak(text):
        """Convert text to speech with gTTS and play it with mpg321."""
        tts = gTTS(text=text, lang='en')
        tts.save("audio.mp3")
        os.system("mpg321 audio.mp3")


    # for news update
    def news():
        """Read out up to five TechCrunch headlines from NewsAPI."""
        main_url = ('https://newsapi.org/v2/top-headlines'
                    '?sources=techcrunch&apiKey=' + NEWS_API_KEY)
        main_page = requests.get(main_url).json()
        articles = main_page.get("articles", [])
        day = ["first", "second", "third", "fourth", "fifth"]
        # zip stops at the shorter sequence, so fewer than five articles is safe
        for ordinal, article in zip(day, articles):
            speak(f"today's {ordinal} news is: {article['title']}")


    def speech_to_text():
        """Listen on the microphone and return the recognised text, or None."""
        required = None  # None selects the default microphone
        for index, name in enumerate(sr.Microphone.list_microphone_names()):
            if "pulse" in name:
                required = index
        r = sr.Recognizer()
        with sr.Microphone(device_index=required) as source:
            r.adjust_for_ambient_noise(source)
            print("Say something!")
            audio = r.listen(source, phrase_time_limit=4)
        try:
            return str(r.recognize_google(audio))
        except sr.UnknownValueError:
            print("Google Speech Recognition could not understand audio")
        except sr.RequestError as e:
            print("Could not request results from Google Speech Recognition service; {0}".format(e))
        return None


    while True:
        speak("drishya here")
        a = speech_to_text()
        print(a)
        if a is None:
            continue  # nothing recognised; listen again
        now = datetime.now()  # read the clock per command, not once at startup

        if "date" in a:
            speak(now.strftime("Today's date is %A, %B %d, %Y"))
        elif "time" in a:
            speak('time is' + now.strftime(' %I:%M %p'))
        elif a == "who are you":
            speak("I am Drishya, your assistant")
        elif a == "what can you do":
            speak("I can do lot of things, for example you can ask me time, date, weather, location. I can send emergency messages, make a call, and many more tasks.")
        elif a == "how are you":
            speak("I am fine, how can I help you sir")
        elif a == "thank you":
            speak("It's my pleasure sir, always ready to help you sir")
        # elif a == "where we are":
        #     speak("we are at Atharva college of engineering, Mumbai, Maharashtra")
        elif "temperature" in a:
            search = "temperature in mumbai"
            url = f"https://www.google.com/search?q={search}"
            page = requests.get(url)
            data = BeautifulSoup(page.text, "html.parser")
            temp = data.find("div", class_="BNeawe")
            if temp is not None:  # the scraped element may be missing
                speak(f"current {search} is {temp.text}")
            else:
                speak("sorry sir, I could not find the temperature")
        elif a == "tell me a joke":
            speak(pyjokes.get_joke())
        elif "news" in a:
            speak("please wait sir, fetching the latest news")
            news()
        elif a == "you can sleep":
            speak("thanks for using me sir, you can call me anytime.")
            sys.exit()
        elif "location" in a:
            speak("wait sir, let me check")
            speak("We are at Bharatiya Vidya Bhavan's Sardar Patel Institute of Technology (S.P.I.T), Mumbai, Maharashtra")
        elif a == 'send emergency message':
            client = Client(TWILIO_SID, TWILIO_TOKEN)
            message = client.messages.create(
                body='I am Drishya, need urgent help. Please reach out to me.',
                from_=TWILIO_FROM,
                to=EMERGENCY_TO,
            )
            print(message.sid)
            speak("Message has been sent sir, waiting for your next command")
        elif "call" in a:
            client = Client(TWILIO_SID, TWILIO_TOKEN)
            client.calls.create(
                twiml='<Response><Say>Call from Drishya, need help.</Say></Response>',
                from_=TWILIO_FROM,
                to=EMERGENCY_TO,
            )
            speak("Phone call has been made sir, waiting for your next command")
        else:
            # Fall back to a one-sentence Wikipedia summary of the phrase
            try:
                result = wikipedia.summary(a, sentences=1)
            except wikipedia.exceptions.WikipediaException:
                result = "sorry sir, I could not find an answer"
            speak(result)

The video for Drishya smart glasses is linked below:

https://drive.google.com/file/d/1x4kDMRBdOP2CQnGHT2v7vtx9ccPevtDY/view?usp=sharing