STUDY OF EXISTING ATTENDANCE SYSTEMS

Every organization or institution needs a robust and stable system to record the attendance of its students or employees. Several kinds of smart attendance systems have been developed, including fingerprint-based, RFID card-based, QR-based, voice recognition-based, and iris-based systems. A fingerprint-based system leaves room for proxy attendance, because an individual's attendance can be faked using that individual's fingerprint. In an RFID card-based system, each student or employee is assigned a card tied to their identity; the card can be lost, and an unauthorized person may misuse it to mark fake attendance. A QR-based system has the same flaws. A voice recognition-based system falls short on accuracy: if an individual's voice changes because of a common flu or some other reason, they may not be marked present, which causes inconvenience, and proxy attendance is possible by playing back a recorded voice. In an iris-based system, the scanners cost more than other modalities, at nearly five times the cost of fingerprint sensors. Because iris scanners are not economical, small institutions and agencies may be unable to afford the setup that iris recognition requires. The person also has to stay steady in front of the device, since any movement makes the iris difficult to recognize. Each of the systems discussed above therefore has flaws that hinder accuracy.

A face recognition-based attendance system answers the flaws discussed above, which makes it a strong candidate among smart attendance systems. It modernizes attendance taking and is built so that it nearly eliminates the chance of proxy attendance. It is economical compared with the iris-based type and, unlike that type, it scans and recognizes an individual regardless of their movements. Attendance can be taken within seconds, which saves time. At present, face recognition-based attendance is one of the finest smart attendance systems.

METHODOLOGY:

Hardware Used:

1. NVIDIA Jetson Nano 2GB Developer Kit

2. TVS Webcam WC 103

Workflow:

The workflow comprises three stages: 1) face and eye detection, 2) training on the dataset, and 3) face and eye recognition and marking attendance on the web server.

Face Detection:

Face detection involves classifying image windows into two types: those containing faces and those without (clutter). The first stage is a classification task that accepts an unknown image as input and produces a binary value indicating whether the image contains any faces. The second stage is a localization task, which finds the position of each face in the image and outputs it as a bounding box (x, y, width, height). After a photo is captured, the system compares it against the images in its database and returns the closest matches.

We use Haar-like features to detect faces in real-time video. This technique performs feature extraction: it identifies eyes, noses, mouths, and other facial structures using edge, line, and center-surround features. The process selects the important characteristics of an image and extracts them for face detection. The detected x, y, w, and h coordinates are then used to draw a rectangular box around each face (our region of interest) in the image. Once the face location is identified, the face image is saved as training data. The same detection technique is used in the recognition process. Eye detection is also incorporated to improve the accuracy and reliability of face detection and recognition.
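To make the idea of an edge feature concrete, the following is a minimal sketch of how a single two-rectangle Haar-like feature can be evaluated with an integral image. It is not the detector used in this project, which would normally rely on a trained cascade of many such features; the function names and the toy 4x4 patch are assumptions made for illustration.

```python
def integral_image(img):
    """Return a summed-area table with an extra zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def edge_feature(ii, x, y, w, h):
    """Two-rectangle (edge) feature: sum of the left half minus sum of the right half."""
    half = w // 2
    left = rect_sum(ii, x, y, half, h)
    right = rect_sum(ii, x + half, y, half, h)
    return left - right


# Toy grayscale patch (hypothetical values): bright left half, dark right half.
patch = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
ii = integral_image(patch)
feature_value = edge_feature(ii, 0, 0, 4, 4)
print(feature_value)  # 1600 - 80 = 1520
```

A large absolute value signals a strong vertical edge inside the window. The integral image lets every rectangle sum be read with four lookups, which is what makes evaluating many features per window affordable on real-time video.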

Training the Dataset (face images):

For training, we need a dataset containing face photos of each student we want to identify. Each image must also be associated with an ID and a student name, so that the algorithm can recognize an input image and return a result. All images of the same student must have the same ID. The images are kept in a single folder, structured as shown below:

Images to be trained

- |---> Person1.1.jpg
- |---> Person1.2.jpg
- |---> Person1.3.jpg
- |---> Person1.4.jpg
- |
- |---> Person2.1.jpg
- |---> Person2.2.jpg
- |---> Person2.3.jpg
- |---> Person2.4.jpg
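The file names above can be turned into training labels with a few lines of code. This is a sketch that assumes the pattern is `<name>.<sample number>.jpg` and that integer IDs are assigned in sorted order; `parse_sample` and `build_labels` are hypothetical helper names, not part of the project.

```python
from pathlib import PurePath


def parse_sample(filename):
    """Split 'Person1.2.jpg' into the student name and the sample number."""
    stem = PurePath(filename).stem       # 'Person1.2'
    name, sample = stem.rsplit(".", 1)   # ('Person1', '2')
    return name, int(sample)


def build_labels(filenames):
    """Assign one integer ID per student, shared by all of that student's images."""
    ids = {}
    labelled = []
    for filename in sorted(filenames):
        name, _sample = parse_sample(filename)
        student_id = ids.setdefault(name, len(ids) + 1)
        labelled.append((filename, student_id, name))
    return labelled, ids


files = ["Person1.1.jpg", "Person1.2.jpg", "Person2.1.jpg", "Person2.2.jpg"]
labelled, ids = build_labels(files)
print(ids)  # {'Person1': 1, 'Person2': 2}
```

Keeping the ID lookup in one dictionary makes it easy to enforce the rule that every image of the same student carries the same ID.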

After creating the dataset, we apply the LBPH (Local Binary Patterns Histograms) computation to obtain a trained model file. The first stage of the LBPH method builds an intermediate image that better represents the original by highlighting its facial features. To do so, the method uses a sliding window governed by the radius and neighbors parameters.

This method is depicted in the figure below:

[Figure: LBP operation on a 3x3 window of a grayscale image]

In the figure above, the image is in grayscale. From it we take a 3x3-pixel portion, which can be expressed as a matrix holding each pixel's intensity. The center pixel value is used as a threshold (169 in the figure), and each neighboring pixel is compared against it: if the neighbor's intensity is greater than or equal to the threshold, it is assigned a binary 1; otherwise it is assigned 0. Reading the eight neighbor bits in a fixed order (ignoring the center position) gives a single 8-bit binary number. This number is converted to decimal and replaces the center pixel value. Applying this to every 3x3 window produces the LBP image, which captures the characteristics of the original image better than the raw intensities do.

Reference: https://www.geeksforgeeks.org/create-local-binary-pattern-of-an-image-using-opencv-python/
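The thresholding step can be sketched in plain Python as follows. The 3x3 values are hypothetical (only the center value 169 comes from the text), and the clockwise bit order starting at the top-left neighbor is an assumption; any fixed order works as long as it is used consistently.

```python
# Neighbor offsets (row, col), clockwise from the top-left corner.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """Return the 8-bit LBP code of the pixel at (r, c) using its 8 neighbors."""
    center = img[r][c]
    code = 0
    for dr, dc in OFFSETS:
        # A neighbor at least as bright as the center contributes a 1 bit.
        bit = 1 if img[r + dr][c + dc] >= center else 0
        code = (code << 1) | bit
    return code


def lbp_image(img):
    """Apply lbp_code to every interior pixel; border pixels are skipped."""
    h, w = len(img), len(img[0])
    return [[lbp_code(img, r, c) for c in range(1, w - 1)] for r in range(1, h - 1)]


# Hypothetical 3x3 window with 169 at the center.
window = [
    [172, 101, 180],
    [95, 169, 200],
    [175, 120, 210],
]
code = lbp_code(window, 1, 1)
print(format(code, "08b"), code)  # 10111010 186
```

Here the center value 169 would be replaced by 186 in the LBP image. Skipping the border is one simple way to handle pixels that lack a full neighborhood.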

Next, we partition the LBP image into a grid of cells so that each cell can be processed separately. Because the LBP image is 8-bit, the histogram of each cell has only 256 positions (0 to 255), each counting the occurrences of one value. We then concatenate the cell histograms to form a new, larger histogram. For example, with a 10x10 grid, the final histogram contains 10x10x256 = 25600 positions. This histogram represents the characteristics of the original image. The histograms for each person are calculated and saved in a single file named "Trainer.yml" (.yml is the file extension of the trained model).

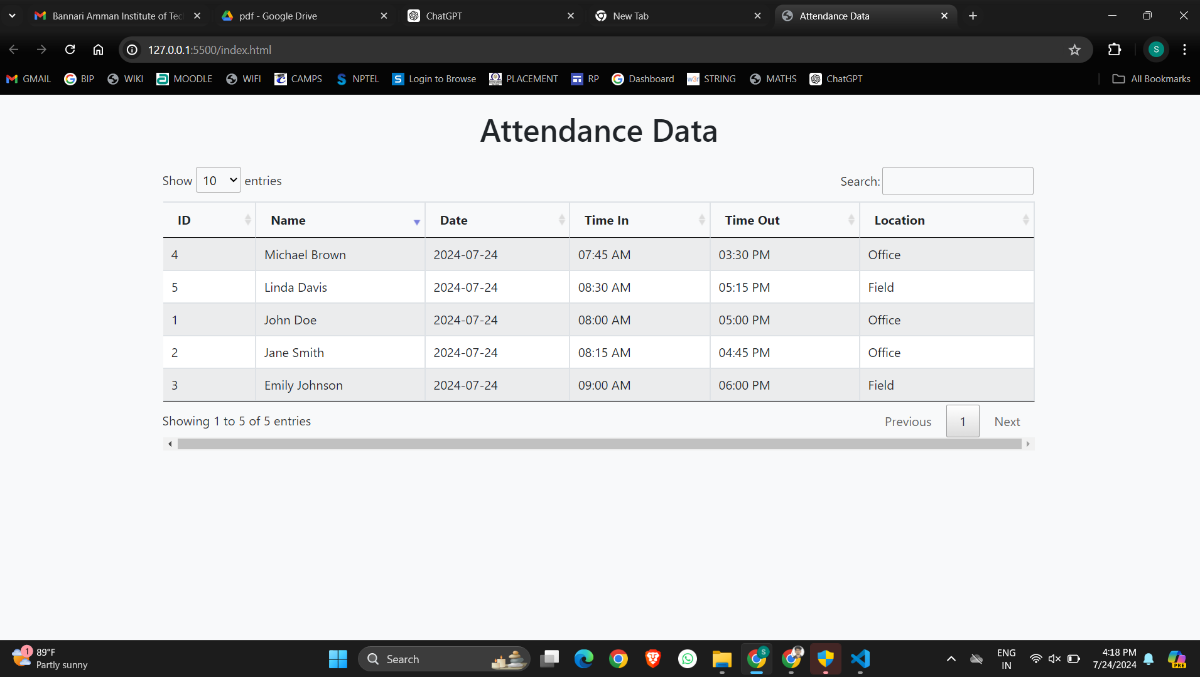
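The grid-and-concatenate step can be checked with a short sketch. The synthetic 20x20 input below stands in for a real LBP image and exists only to confirm the descriptor length; the function names are illustrative, and the sketch assumes the image dimensions divide evenly by the grid size.

```python
def cell_histogram(lbp, top, left, height, width):
    """256-bin histogram of the LBP codes inside one grid cell."""
    hist = [0] * 256
    for r in range(top, top + height):
        for c in range(left, left + width):
            hist[lbp[r][c]] += 1
    return hist


def lbph_descriptor(lbp, grid_x, grid_y):
    """Concatenate the cell histograms row by row into one long descriptor."""
    h, w = len(lbp), len(lbp[0])
    cell_h, cell_w = h // grid_y, w // grid_x
    descriptor = []
    for gy in range(grid_y):
        for gx in range(grid_x):
            descriptor.extend(
                cell_histogram(lbp, gy * cell_h, gx * cell_w, cell_h, cell_w)
            )
    return descriptor


# Synthetic 20x20 "LBP image" with codes in 0..255 (not real face data).
lbp = [[(r * 20 + c) % 256 for c in range(20)] for r in range(20)]
descriptor = lbph_descriptor(lbp, grid_x=10, grid_y=10)
print(len(descriptor))  # 10 * 10 * 256 = 25600
```

Keeping one histogram per cell preserves where on the face a pattern occurs, which a single global histogram would lose.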

Face Recognition and Marking Attendance:

Face recognition for marking attendance works on real-time images captured by an external camera. The system first detects faces using Haar-like features and then applies the Local Binary Patterns (LBP) algorithm to each detected face. For each face, a histogram is created and compared with the histograms in the pre-trained model stored in "Trainer.yml". The comparison uses Euclidean distance to find the closest stored histogram, which identifies the recognized face.

The algorithm returns the label of the closest histogram along with a confidence value. This value is the distance between the two histograms, so a lower value indicates a closer match. If it is below a predefined threshold, the recognition is deemed successful.

The recognized individual's attendance is then recorded in an Excel file, "Attendance_{date}.xlsx", which includes their ID, name, date, and time of recognition. The attendance data is also displayed by a web server built with a framework such as Flask or Django. The web server reads the Excel file, extracts the attendance information, and renders it dynamically on a web page, giving real-time access to attendance records.
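The matching and recording logic described above can be sketched as follows. This is an illustration under stated assumptions rather than the project's code: the model is a plain dictionary with one histogram per ID, the 4-bin histograms and the threshold of 2.0 are toy values, the date format in the file name is a guess, and writing the row to the Excel file is left out.

```python
import math
from datetime import datetime


def euclidean(a, b):
    """Euclidean distance between two histograms of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def recognize(query, model, threshold):
    """Return (label, distance) for the closest histogram, or (None, distance) if too far."""
    best_label, best_distance = None, math.inf
    for label, hist in model.items():
        d = euclidean(query, hist)
        if d < best_distance:
            best_label, best_distance = label, d
    if best_distance < threshold:
        return best_label, best_distance
    return None, best_distance


def attendance_row(student_id, name, when):
    """Build one attendance record with the four fields named in the text."""
    return {
        "ID": student_id,
        "Name": name,
        "Date": when.strftime("%Y-%m-%d"),
        "Time": when.strftime("%H:%M:%S"),
    }


# Toy model: one 4-bin histogram per student ID (hypothetical values).
model = {1: [4, 0, 0, 0], 2: [0, 0, 0, 4]}
names = {1: "Person1", 2: "Person2"}

label, distance = recognize([3, 1, 0, 0], model, threshold=2.0)
row = attendance_row(label, names[label], datetime(2024, 1, 15, 9, 30, 0))
filename = f"Attendance_{row['Date']}.xlsx"
print(label, round(distance, 3), filename)
```

Returning the distance even when no label is accepted makes it easier to tune the threshold against real recordings.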

Webserver:
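As a rough sketch of the display step, the function below turns attendance rows into an HTML table using only the standard library. It assumes the rows have already been read from the Excel file into dictionaries with the keys ID, Name, Date, and Time; `render_attendance_table` is a hypothetical helper whose output a Flask or Django view could return.

```python
from html import escape

COLUMNS = ("ID", "Name", "Date", "Time")


def render_attendance_table(rows):
    """Render attendance rows as an HTML table, escaping every cell value."""
    header = "<tr>" + "".join(f"<th>{col}</th>" for col in COLUMNS) + "</tr>"
    body = "".join(
        "<tr>"
        + "".join(f"<td>{escape(str(row[col]))}</td>" for col in COLUMNS)
        + "</tr>"
        for row in rows
    )
    return f"<table>{header}{body}</table>"


# Hypothetical record for illustration.
rows = [{"ID": 1, "Name": "Person1", "Date": "2024-01-15", "Time": "09:30:00"}]
page = render_attendance_table(rows)
print(page)
```

Escaping each cell keeps a name containing HTML characters from breaking the page.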

Benefits of the project:

- Time-Saving For Your Workforce

- Increased Efficiency and Capability

- Enhances Workplace Security

- Improved Employee Wellness And Productivity

- Automated Time Tracking

- Easy Integration With Other Systems

- Easy Record Management