Video demo of the project

1)

With the rapid development of science and technology, particularly artificial intelligence, laboratories are evolving into smart labs that integrate modern technologies and automation. Smart labs, such as the system at MET Institute in India, demonstrate the advantages of connecting devices via the Internet, remote monitoring and control, and automated control of environmental conditions. This system not only optimizes energy use and improves working conditions but also enhances security through facial recognition technology, replacing easily hackable fingerprint scanners. Similarly, in Vietnam, the VinAI Research Institute has applied highly rated facial recognition technology to manage personnel access and has integrated the virtual assistant Vivi, which uses voice recognition and natural language processing to assist users.

However, despite these advancements, current labs still face significant limitations. Manual and loosely managed operations are still common, and many facilities remain rudimentary and fail to meet the demands of modern research and education. The shortcomings in managing facilities lead to issues such as equipment loss and shortages, hindering research processes.

Therefore, implementing a more advanced smart lab system has become a pressing need. The project "AI humanoid robot for management and security" aims to address this need by applying deep learning algorithms to make the monitoring and management of personnel, equipment, and facilities automatic, fast, and accurate. By incorporating deep learning, the system will not only improve the accuracy of facial and voice recognition but also enhance error detection, predict maintenance needs, and optimize research processes, thereby increasing efficiency and safety in laboratory operations.

2)

To address the issues mentioned, we are developing a smart lab system focused on personnel management. To overcome the limitations of traditional manual management, we are creating an automated system that recognizes user identities and permissions, stores personnel information, and tracks entry and exit times to meet personnel management requirements.

To tackle the rigidity, inefficiency, and lack of transparency of old methods, we are designing a user-friendly system that interacts with users through various interfaces, such as screens, voice, and gestures. Additionally, the system will be capable of storing and extracting user information for easy monitoring and evaluation.

The recognition system must be highly accurate and run in real time, including real-time retrieval of user information.

Target and Scope of Research

Target

- Effective algorithms and models for identity detection and recognition, facial recognition, and mask detection.

- Databases related to facial recognition and masked faces.

Scope of Research

- Signal processing, image processing, machine learning techniques, and deep learning techniques.

- Software technologies, web programming technologies, and data storage technologies.

Approach and Research Methods

Approach

- Research methods and system development, including data storage and management.

- Build upon foundational knowledge in: recognition techniques, machine learning techniques, data analysis, system design and implementation (hardware and software), database collection and creation, testing and improvement.

Research Methods

- Review relevant literature on facial recognition systems and models to compare results obtained from different models.

- Study the problem of recognizing individuals wearing masks.

- Develop a database and database management system to store this data.

- Design a website using ReactJS for administrators and students.

- Test and evaluate the system during the installation and trial phases.

3)

System block diagram

The rotational mechanism is achieved using two servos located in the neck area to track and adjust the camera to the center of the user's face. The rotation angle is calculated based on a face-tracking algorithm.

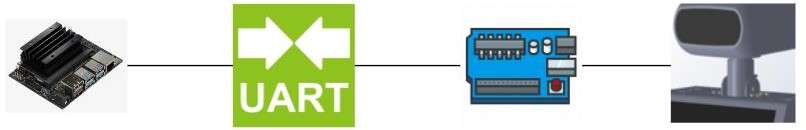

The Jetson Nano calculates the desired rotation angle and communicates this information to the Arduino via UART. The Arduino receives and decodes the data string and then controls the servos to rotate to the calculated angle.
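The exchange between the Jetson Nano and the Arduino can be sketched as follows. The source does not give the format of the data string, so the `"<pan>,<tilt>\n"` message layout, the 0 to 180 degree clamp, and all function names here are hypothetical. The sketch only shows one plausible way to encode two servo angles on the sender side and decode them again.

```python
def clamp_angle(angle, low=0, high=180):
    """Round to an integer and keep the angle inside the assumed servo range."""
    return max(low, min(high, int(round(angle))))


def encode_angles(pan, tilt):
    """Build an ASCII line such as b"90,45\n" (hypothetical message format)."""
    return f"{clamp_angle(pan)},{clamp_angle(tilt)}\n".encode("ascii")


def decode_angles(line):
    """Mirror of the parsing the receiver would perform on one line."""
    pan_text, tilt_text = line.decode("ascii").strip().split(",")
    return int(pan_text), int(tilt_text)


def send_angles(port, pan, tilt):
    """Write one command to any object with a write(bytes) method."""
    port.write(encode_angles(pan, tilt))
```

On the Jetson Nano, `port` could be a pySerial `serial.Serial` object opened on the board's UART device; the device path and baud rate depend on the wiring and are not stated in the source.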

Face recognition algorithm diagram

- Cameras capture images of the face, which are then converted into digital data.

- The digital data is processed by algorithms to detect the position of the face and extract facial features.

- The extracted features are compared with the facial data stored in the database to return the identification result.
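The comparison step can be illustrated with a minimal sketch. It assumes the extracted features are fixed-length, non-zero embedding vectors and that matching uses cosine similarity with a threshold. The source does not specify the metric, so the function names, the `database` dictionary, and the 0.8 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(query, database, threshold=0.8):
    """Return (name, score) of the best match, or (None, score) if too weak.

    database maps a user name to that user's stored feature vector.
    """
    best_name, best_score = None, -1.0
    for name, embedding in database.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score
```

Returning `None` below the threshold lets the caller treat an unknown face differently from a registered user.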

+ Face detection: The MTCNN (Multi-task Cascaded Convolutional Networks) algorithm consists of three cascaded CNNs: P-Net (Proposal Network), R-Net (Refine Network), and O-Net (Output Network). Each input image is first resized to several scales to form an image pyramid, which is the input to the cascade.

P-Net (Proposal Network)

R-Net (Refine Network)

O-Net (Output Network)
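The image pyramid fed to the cascade can be sketched as a list of scale factors. The values below (a 12-pixel P-Net input, a minimum face size of 20 pixels, and a scale step of 0.709) are common defaults in public MTCNN implementations and are assumptions here, not settings taken from this project.

```python
def pyramid_scales(width, height, min_face_size=20, factor=0.709, net_input=12):
    """Return the scale factors applied to an image to build the pyramid.

    The first scale maps a face of min_face_size pixels onto the net_input
    window; each further level shrinks the image by `factor` until its
    shorter side no longer fits the window.
    """
    scale = net_input / min_face_size
    min_side = min(width, height) * scale
    scales = []
    while min_side >= net_input:
        scales.append(scale)
        scale *= factor
        min_side *= factor
    return scales
```

Each returned scale corresponds to one resized copy of the input image, so smaller scales let the fixed-size window cover larger faces.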

During the training process, we use loss functions for optimization:

- Classification of face vs. non-face in images: The cross-entropy loss function is used for this task.

For each sample $x_i$ in the training dataset, the loss is computed as follows:

$$L_i = -\left( y_i \log(p_i) + (1 - y_i) \log(1 - p_i) \right)$$

where $p_i$ is the probability produced by the network that sample $x_i$ is a face, and $y_i \in \{0, 1\}$ is the ground-truth binary label (0 for non-face, 1 for face).
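The per-sample loss can be checked numerically with a short sketch that uses the standard binary cross-entropy form, -(y log p + (1 - y) log(1 - p)). The function names and the small `eps` clamp, which avoids taking log(0), are illustrative additions rather than part of the original training code.

```python
import math


def face_cross_entropy(y, p, eps=1e-12):
    """Binary cross-entropy for one sample.

    y is the label (0 for non-face, 1 for face); p is the predicted
    probability that the sample is a face.
    """
    p = min(max(p, eps), 1.0 - eps)  # keep log() finite
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def mean_cross_entropy(labels, probs):
    """Average the per-sample loss over a batch."""
    losses = [face_cross_entropy(y, p) for y, p in zip(labels, probs)]
    return sum(losses) / len(losses)
```

A confident correct prediction gives a loss near zero, while a confident wrong prediction gives a large loss, which is what drives the classifier during training.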

Results of Using the MTCNN Algorithm for Face Detection

Face Alignment Problem

The MTCNN model returns 5 key points on the face, 2 of which correspond to the eyes. We place these two points in the Oxy coordinate system, calculate the angle between the line joining them and the Ox axis, and rotate the image by the inverse of this angle so that the eyes lie on a horizontal line and the face is aligned.
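The alignment geometry can be sketched with two small functions. The code below is an illustration under assumptions: the eye coordinates are example values, the function names are hypothetical, and the rotation is applied to points rather than to a full image so that the result is easy to verify.

```python
import math


def eye_angle_deg(left_eye, right_eye):
    """Angle of the line through the eyes relative to the Ox axis, in degrees."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, angle_deg):
    """Rotate a point about a center by angle_deg in the same coordinate system."""
    theta = math.radians(angle_deg)
    x = point[0] - center[0]
    y = point[1] - center[1]
    xr = x * math.cos(theta) - y * math.sin(theta)
    yr = x * math.sin(theta) + y * math.cos(theta)
    return xr + center[0], yr + center[1]


def align_eyes(left_eye, right_eye):
    """Rotate both eyes by the inverse angle about their midpoint."""
    angle = eye_angle_deg(left_eye, right_eye)
    center = ((left_eye[0] + right_eye[0]) / 2.0,
              (left_eye[1] + right_eye[1]) / 2.0)
    return (rotate_point(left_eye, center, -angle),
            rotate_point(right_eye, center, -angle))
```

For a full image, the same rotation about the eye midpoint would be applied to every pixel, for example with OpenCV's `cv2.getRotationMatrix2D` and `cv2.warpAffine`. The sign passed to such a routine depends on its axis and rotation conventions and should be checked on a test image.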

Results After Performing Face Alignment

Face Tracking Algorithm

- First, use the face detection model to identify the face and obtain the bounding box. Determine the center of the bounding box.

- Define the origin O as the center of the image. Convert the coordinate system in OpenCV to the Oxy coordinate system. Determine the relative position of the bounding box center with respect to the origin O.

- Convert the Oxy coordinate system to the servo angle coordinate system to obtain the desired servo rotation angle, which is then sent to the rotational mechanism.
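The three tracking steps can be sketched as below. The linear pixel-to-angle mapping and the field-of-view values (60 and 40 degrees) are simplifying assumptions for illustration, and the function names are hypothetical. The real conversion depends on the camera lens and the servo mounting.

```python
def bbox_center(x, y, w, h):
    """Center of a bounding box given as top-left corner plus width and height."""
    return x + w / 2.0, y + h / 2.0


def to_centered_xy(cx, cy, frame_w, frame_h):
    """Convert OpenCV pixel coordinates (origin top-left, y down) to Oxy
    coordinates with the origin O at the image center and y pointing up."""
    return cx - frame_w / 2.0, frame_h / 2.0 - cy


def servo_offsets(px, py, frame_w, frame_h, hfov_deg=60.0, vfov_deg=40.0):
    """Map an offset from the image center to pan and tilt corrections in
    degrees, assuming the angle grows linearly with the pixel offset."""
    pan = px / (frame_w / 2.0) * (hfov_deg / 2.0)
    tilt = py / (frame_h / 2.0) * (vfov_deg / 2.0)
    return pan, tilt
```

For a 640x480 frame, a face box of 40x40 pixels at (460, 100) has its center at (480, 120), which is (160, 120) relative to O and maps to a correction of 15 degrees in pan and 10 degrees in tilt under these assumptions.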

Face Anti-Spoofing Method Using an Infrared Camera

For this task, we use the IMX219-160IR infrared camera, designed for the NVIDIA Jetson Nano, together with the IMX219-160 RGB camera.

Introduction to the Mask Classification Problem

The training data includes only one image of each user, taken without glasses or a mask. To make the dataset more diverse for model training, this image is passed through an augmentation module that generates additional variants, such as the user wearing a mask, wearing glasses, a blurred image, and images with increased or decreased brightness.
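A minimal sketch of the simpler augmentations (brightness changes and blurring) is given below. It works on a grayscale image stored as a list of rows of 0 to 255 values so that it needs no external library. The function names, the brightness step of 40, and the 3x3 box blur are assumptions, and the mask and glasses variants are omitted because they require overlay assets that the source does not describe.

```python
def adjust_brightness(img, delta):
    """Add delta to every pixel and clip the result to the 0..255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in img]


def box_blur(img):
    """Replace each pixel with the integer mean of its 3x3 neighborhood,
    using only the neighbors that fall inside the image."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            neigh = [img[rr][cc]
                     for rr in range(max(0, r - 1), min(h, r + 2))
                     for cc in range(max(0, c - 1), min(w, c + 2))]
            row.append(sum(neigh) // len(neigh))
        out.append(row)
    return out


def augment(img, step=40):
    """Produce several variants of one enrollment image."""
    return {
        "brighter": adjust_brightness(img, step),
        "darker": adjust_brightness(img, -step),
        "blurred": box_blur(img),
    }
```

Each variant keeps the identity label of the original image, so a single enrollment photo yields several training samples.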