EchoLens smart glasses enhance communication for deaf and mute individuals by converting speech to text and translating sign language into spoken words. The project integrates 3D printing, custom PCBs, and AI-driven sign detection for real-time interaction.


I. Building Steps:

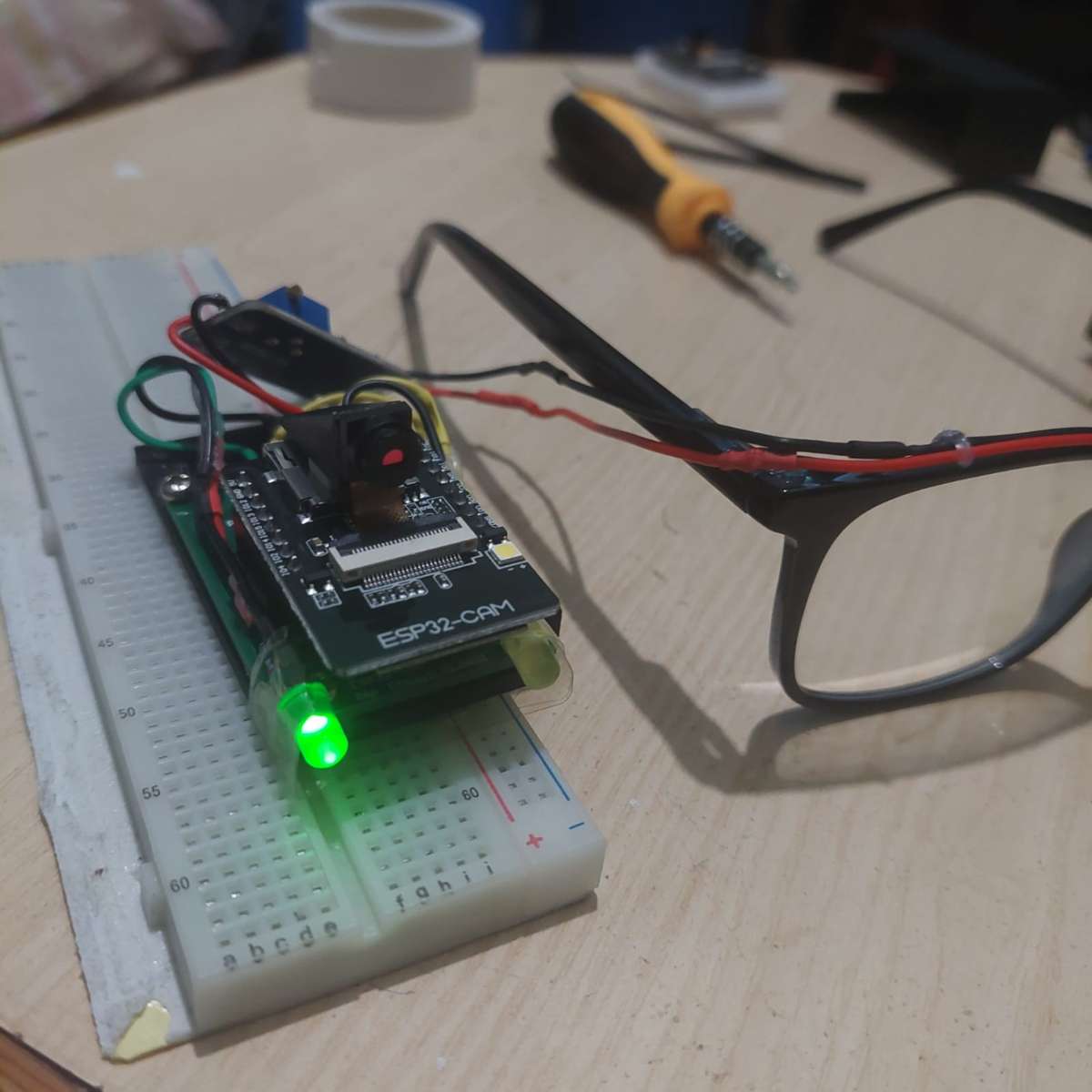

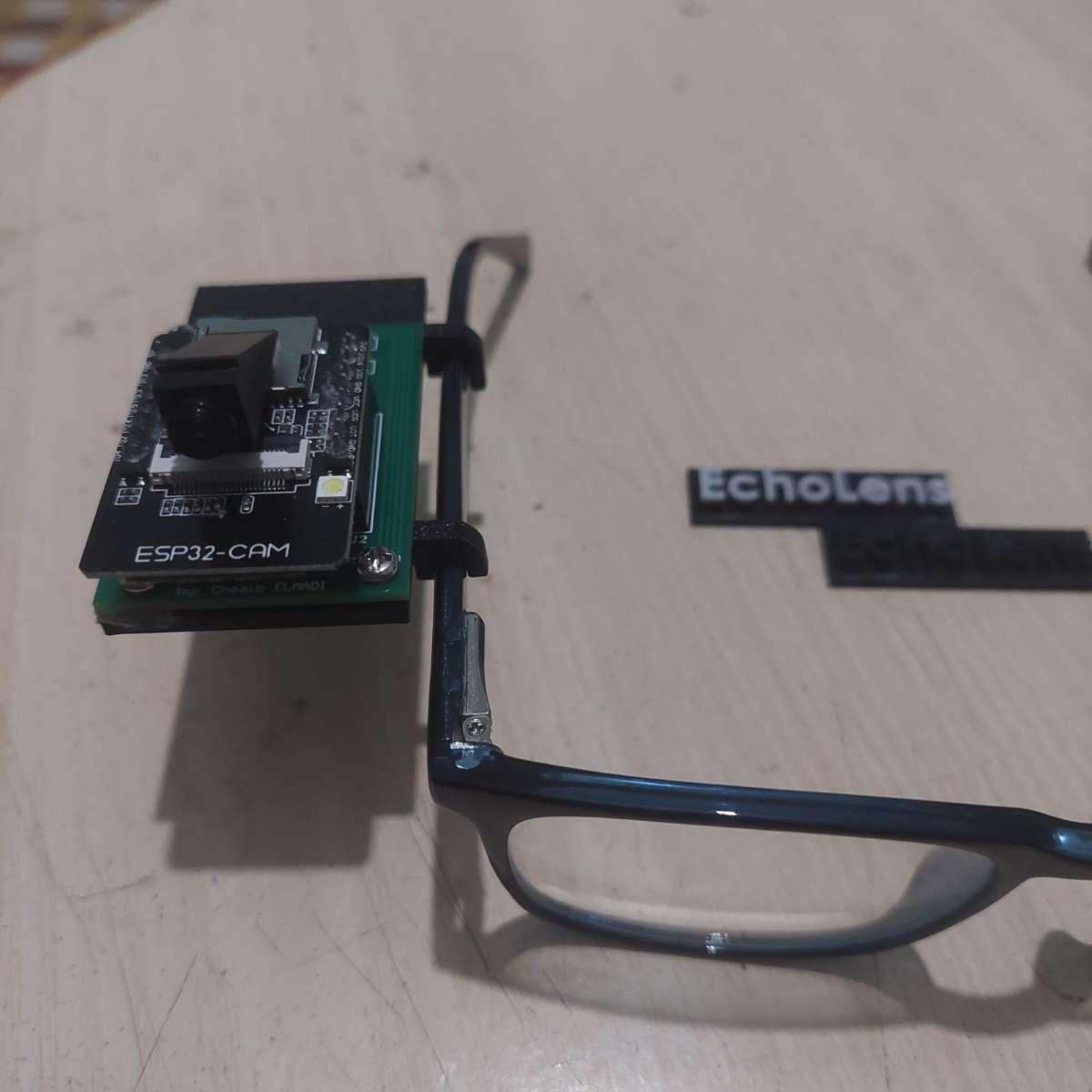

1. Design and 3D Printing of the Frame:

- The design process began with modeling a frame that houses the ESP32-CAM on the right side and the battery on the left, so the components are distributed across both sides of the glasses.

- SolidWorks was used for the frame design, which was then 3D printed.

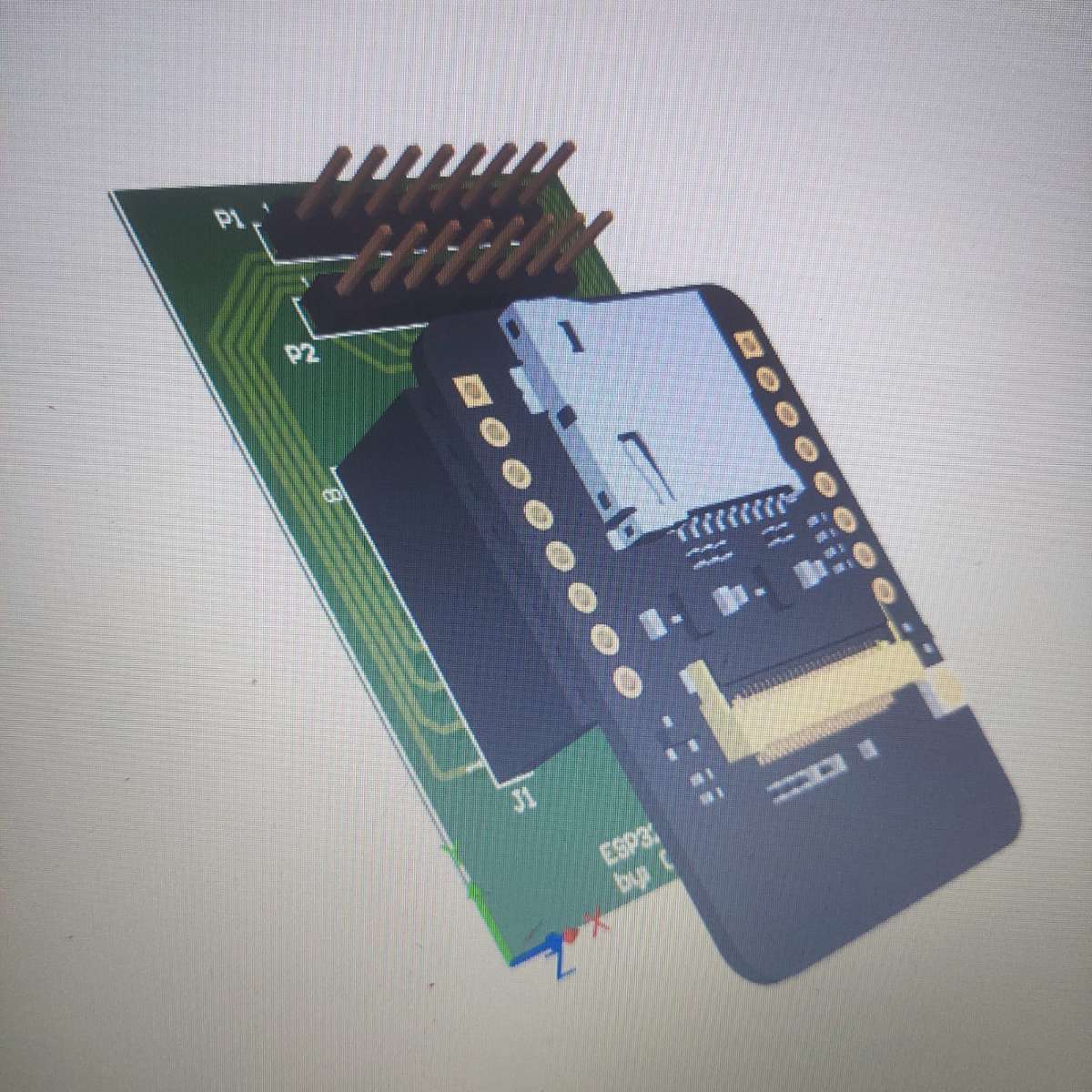

2. Design and Fabrication of the PCB:

- After completing the 3D frame design, a custom PCB was created using Altium Designer to secure the ESP32-CAM and simplify the soldering process.

- The PCB design was sent to PCBWay for fabrication.


3. AI Model for Sign Detection:

- An AI model was developed to detect 16 sign language signs.

- The model takes hand landmarks obtained via MediaPipe as input and classifies them with a decision tree classifier from scikit-learn.

- Data points for each sign were collected and used to train the model.
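As an illustration of how hand points could be prepared for a classifier, here is a minimal sketch. It assumes each hand arrives as 21 `(x, y)` pairs (the number of landmarks MediaPipe Hands reports per hand) and that wrist-relative, scale-normalized coordinates are used as features. The function name `landmarks_to_features` and the normalization scheme are assumptions made for this example, not necessarily what EchoLens does.

```python
import math

NUM_LANDMARKS = 21  # MediaPipe Hands reports 21 landmarks per detected hand


def landmarks_to_features(landmarks):
    """Turn 21 (x, y) hand points into a position- and scale-independent vector.

    Hypothetical helper for illustration; the project's real feature
    extraction may differ.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")

    # Use the wrist (landmark 0) as the origin so the hand's position
    # in the camera frame does not affect the features.
    wrist_x, wrist_y = landmarks[0]
    shifted = [(x - wrist_x, y - wrist_y) for x, y in landmarks]

    # Divide by the largest wrist-to-point distance so hand size and
    # distance from the camera do not affect the features either.
    scale = max(math.hypot(dx, dy) for dx, dy in shifted) or 1.0

    features = []
    for dx, dy in shifted:
        features.extend((dx / scale, dy / scale))
    return features
```

A list of such 42-value vectors, together with one sign label per vector, is the kind of input that scikit-learn's `DecisionTreeClassifier.fit(X, y)` accepts for training.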

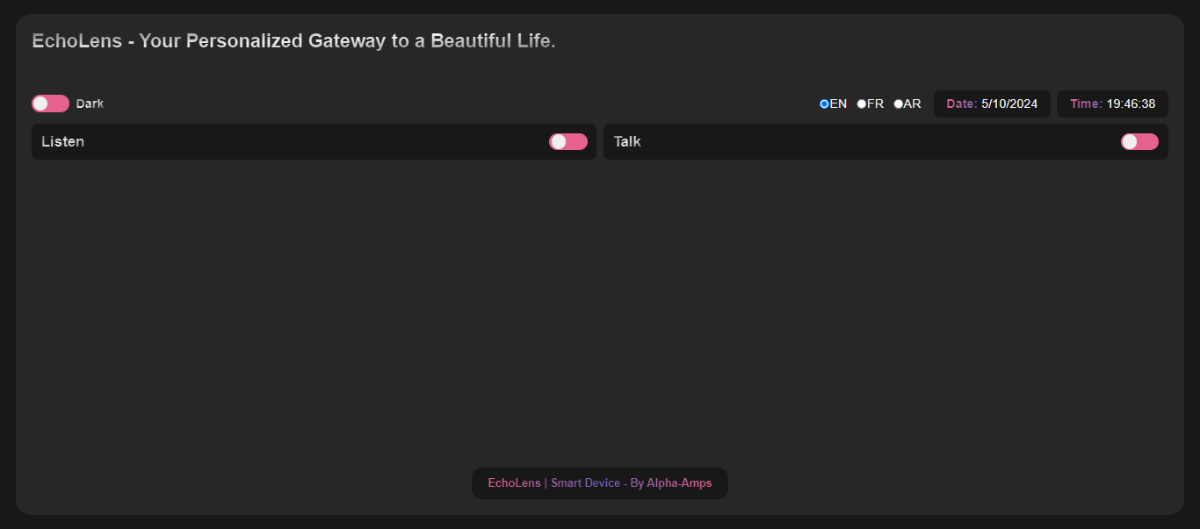

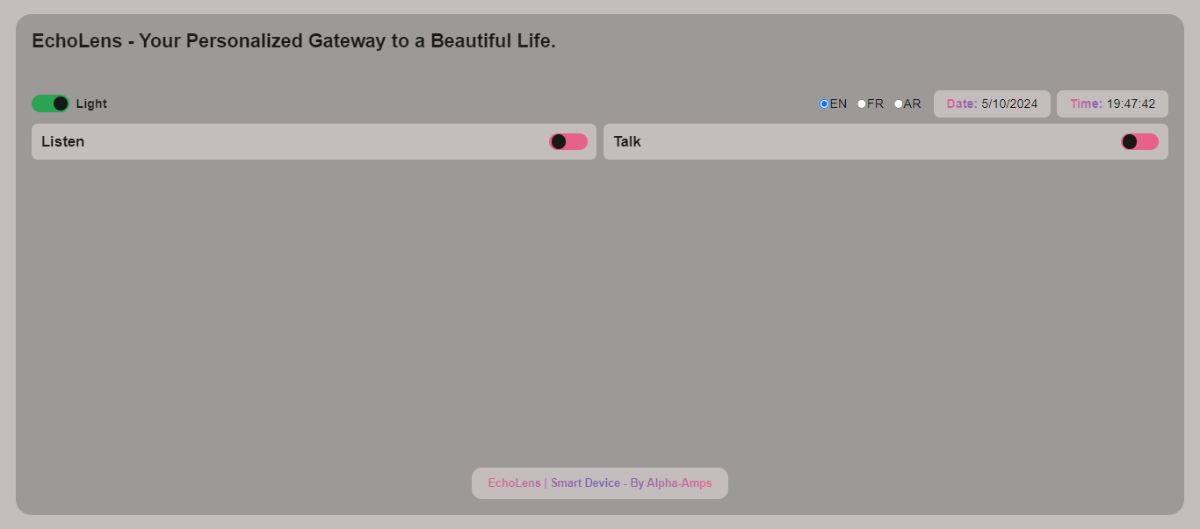

4. Creation of the EchoLens Web Page:

- A web page, served by the ESP32-CAM, was set up to make the smart glasses easier to use.

- The page provided two options: "Listening Mode" and "Talking Mode," with detailed demonstrations of how to use these features.

5. Data Exchange:

- The ESP32-CAM firmware (C++), the AI model (Python), and the web page (JavaScript) were connected so that data flows between all three components.
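One common way to pass data between components written in different languages is a small JSON message, since C++, Python, and JavaScript can all parse it. The sketch below shows how the Python side might encode a detected sign. The field names (`type`, `sign`, `lang`, `confidence`, `ts`) and both helper functions are hypothetical and are not taken from the EchoLens code.

```python
import json
import time


def encode_sign_message(sign, language, confidence, timestamp=None):
    """Serialize one detected sign as a compact JSON string (hypothetical schema)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    payload = {
        "type": "sign",                      # assumed message type tag
        "sign": sign,                        # label predicted by the model
        "lang": language,                    # language the user selected
        "confidence": round(confidence, 3),  # assumed field, not in the source
        "ts": time.time() if timestamp is None else timestamp,
    }
    # Compact separators keep the payload small for a microcontroller link.
    return json.dumps(payload, separators=(",", ":"))


def decode_sign_message(raw):
    """Parse a message and check that the required fields are present."""
    payload = json.loads(raw)
    missing = {"type", "sign", "lang"} - payload.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return payload
```

For example, `encode_sign_message("hello", "en", 0.93)` produces a single-line string that the web page could read with `JSON.parse` before speaking the message aloud.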

Video Demo

https://github.com/Choaib-ELMADI/echolens/blob/main/Media/Demo/Video%20Demo.mp4

II. User Guide for EchoLens:

- Users could choose between "Listen" and "Talk" modes, with a flashing LED indicating the selected mode.

- In "Listen" mode, EchoLens captured and displayed the interlocutor's speech on the screen.

- In "Talk" mode, users could select their preferred language, and EchoLens would capture and process their signs, sending the detected message to the web page for the other person to hear.

III. Future Objectives:

- Increase the variety of signs in the dataset to enhance the AI's ability to interpret sign language.

- Integrate a transparent display on the glasses for a seamless user experience, eliminating the need for an external web page.

IV. More Details:

https://github.com/Choaib-ELMADI/echolens