Introduction

Agriculture is an essential industry that faces numerous challenges, including labour shortages, increasing demand for food, and the need for sustainable farming practices. To address these challenges, the integration of advanced technology in farming operations has become increasingly important. One such innovation is the development of a multifunctional agricultural robot. This robot is designed to perform a variety of tasks, including weeding, pollination, and crop health monitoring, thereby automating labour-intensive processes and improving overall farm efficiency.

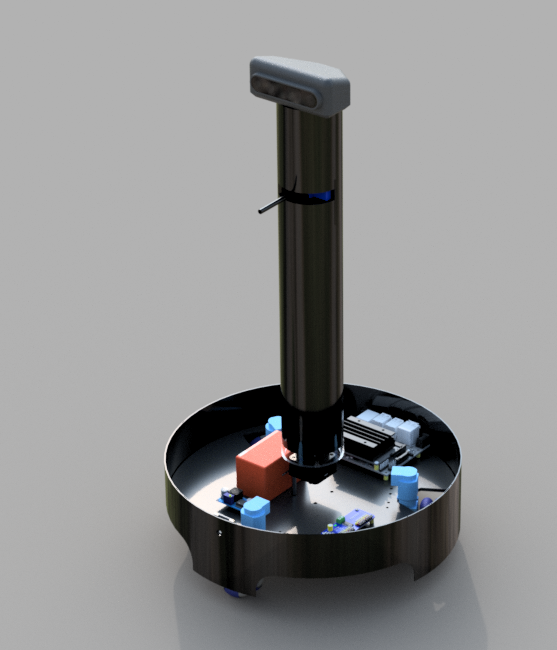

The multifunctional agricultural robot is equipped with state-of-the-art technology, including autonomous navigation, 3D vision systems, and machine learning algorithms. It employs a combination of hardware and software components to navigate through fields, identify and remove weeds, assist in pollination, and monitor the health of crops. The robot's main processor, an NVIDIA Jetson Nano, handles complex computations and processes data from various sensors, including an Intel RealSense camera and an ESP32-CAM module.

One of the key features of the robot is its ability to autonomously navigate through agricultural environments. This is achieved using a combination of 3D cameras and stereo vision, which provide depth perception and enable the robot to avoid obstacles and efficiently cover the field. The robot also uses SparkFun DC motors and an L298N motor driver to control its movement, allowing it to perform precise maneuvers.

In addition to navigation, the robot is equipped with specialized tools for task execution. For weeding, a brush cutter is mounted on the bottom of the robot, which is activated to remove unwanted vegetation. For pollination, the robot can be fitted with a blower mechanism that facilitates the transfer of pollen from flower to flower, a critical process for fruit and seed production. The robot also plays a crucial role in crop health monitoring, using the Intel RealSense camera to capture high-resolution images of the crops. These images are then processed using machine learning models to detect signs of disease, nutrient deficiencies, or other issues that may affect crop yield.

The robot is monitored and controlled via a React-based web application, which provides real-time feedback from the ESP32-CAM and other sensors. This user-friendly interface allows farmers and operators to easily oversee the robot's operations, make adjustments, and receive alerts about potential issues in the field.

Overall, the multifunctional agricultural robot represents a significant advancement in the automation of farming tasks. By integrating cutting-edge technologies, the robot not only reduces the need for manual labour but also enhances the precision and efficiency of agricultural practices. This innovation has the potential to revolutionize the agricultural industry, making farming more sustainable, productive, and efficient.

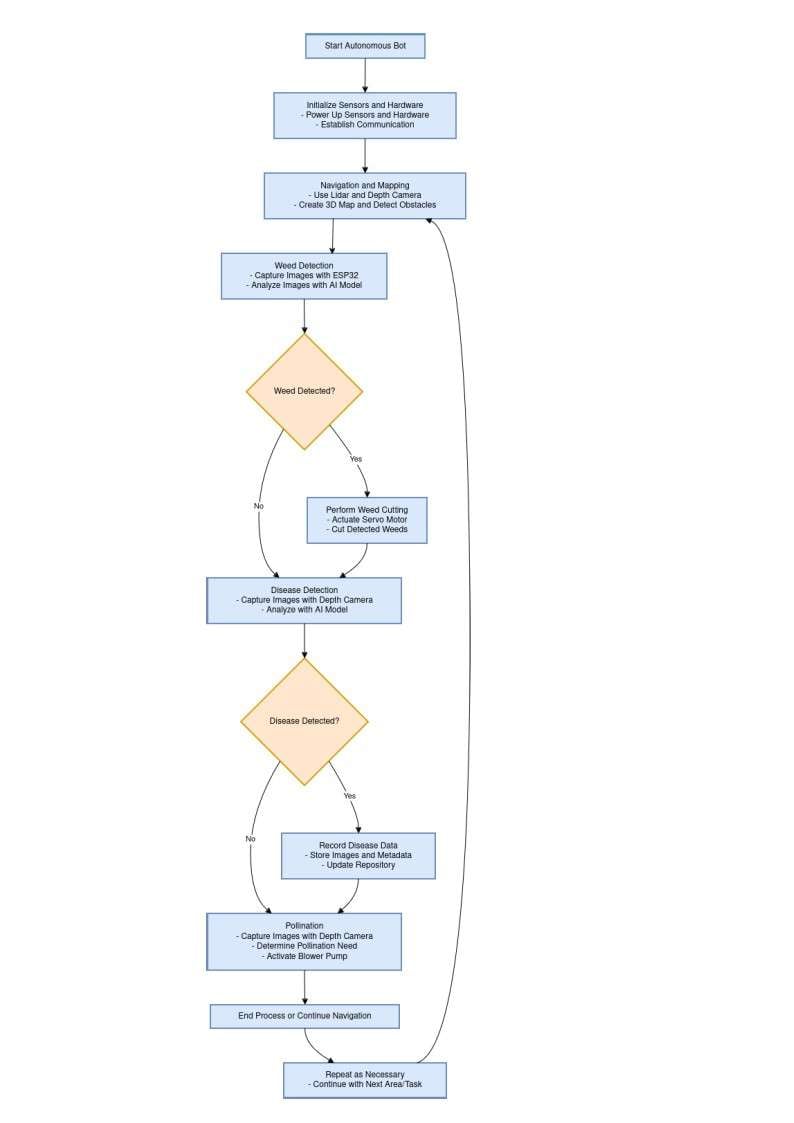

Workflow

System Architecture

The system architecture of the multifunctional agricultural robot is designed to integrate various hardware and software components, enabling it to perform complex agricultural tasks autonomously. The architecture is modular, allowing for flexibility in adding or modifying components as needed. This section provides a detailed overview of the system architecture, highlighting the key elements and their interactions.

Central Processing Unit (Jetson Nano)

The NVIDIA Jetson Nano serves as the robot's main onboard computer, responsible for high-level decision-making and computational tasks. It is equipped with a quad-core ARM Cortex-A57 CPU and a 128-core NVIDIA Maxwell GPU (128 CUDA cores), providing sufficient computational power for real-time image processing, machine learning inference, and sensor data fusion.

- Operating System: The Jetson Nano runs Ubuntu with the Robot Operating System (ROS) middleware, which provides a framework for handling communication between hardware components and managing software nodes.

- Machine Learning: The Jetson Nano is capable of running machine learning models locally, enabling tasks such as weed identification, crop health analysis, and navigation.

Microcontroller (ESP32)

The ESP32 microcontroller acts as a secondary processor, responsible for low-level control tasks and interfacing with various sensors and actuators.

- Control: The ESP32 manages the motor drivers, sensors, and other peripherals, ensuring real-time responsiveness for tasks like motor control and data acquisition.

- Communication: It communicates with the Jetson Nano via serial or Wi-Fi, providing data from sensors and receiving commands for actuating motors and other components.
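The Jetson-to-ESP32 link is described only as "serial or Wi-Fi". As an illustration, the sketch below shows one way to frame a motor command as a short ASCII line with an integrity check. The `$M,<left>,<right>*<checksum>` layout and the XOR checksum (borrowed from the NMEA 0183 convention) are assumptions, not the project's actual protocol.

```python
# Hypothetical ASCII framing for Jetson Nano -> ESP32 motor commands.
# Assumed layout (NMEA-0183 style): $M,<left_pwm>,<right_pwm>*<XX>\n
# where XX is the hex XOR of every character between '$' and '*'.


def checksum(payload: str) -> int:
    """XOR all bytes of the payload (the text between '$' and '*')."""
    value = 0
    for byte in payload.encode("ascii"):
        value ^= byte
    return value


def encode_motor_command(left: int, right: int) -> str:
    """Build a frame for signed PWM values in the range -255..255."""
    for name, pwm in (("left", left), ("right", right)):
        if not -255 <= pwm <= 255:
            raise ValueError(f"{name} PWM {pwm} outside -255..255")
    payload = f"M,{left},{right}"
    return f"${payload}*{checksum(payload):02X}\n"


def decode_motor_command(frame: str) -> tuple[int, int]:
    """Parse and verify a frame; raises ValueError on any corruption."""
    frame = frame.strip()
    if not frame.startswith("$") or "*" not in frame:
        raise ValueError("malformed frame")
    payload, _, received = frame[1:].partition("*")
    if int(received, 16) != checksum(payload):
        raise ValueError("checksum mismatch")
    kind, left, right = payload.split(",")
    if kind != "M":
        raise ValueError(f"unexpected message type {kind!r}")
    return int(left), int(right)
```

On the Jetson, the encoded string could be written to the port with pySerial (for example `serial.Serial("/dev/ttyUSB0", 115200).write(frame.encode())`, where the device path and baud rate are placeholders); the ESP32 firmware would perform the same checksum test in C++ and drop any frame that fails it.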

Sensors and Cameras

The robot is equipped with several sensors and cameras to gather environmental data and provide the necessary input for navigation and task execution.

- Intel RealSense Camera: Positioned at the top of the robot, this stereo camera provides 3D depth perception. It captures high-resolution color and depth images, which are used for crop health monitoring and obstacle detection.

- Data Processing: The captured data is processed on the Jetson Nano, where machine learning models analyze the images to identify plant health issues.

- ESP32-CAM: This camera module, mounted at the bottom of the robot, streams live video feed for real-time monitoring. The feed is accessible through the React App, allowing operators to observe the robot's environment.

- Applications: It is particularly useful for tasks that require close-up views, such as weeding and pollination.

Actuators and Motor Drivers

The robot's movement and task-specific actions are controlled by a set of actuators and motor drivers.

- Motors (SparkFun): The robot uses DC motors with encoders for precise control. The motors are responsible for locomotion, allowing the robot to navigate through fields.

- Encoders: These provide feedback on the motor's position and speed, enabling accurate control of the robot's movements.

- L298N Motor Driver: This dual H-bridge motor driver controls the power supplied to the motors, allowing for direction and speed control.

- Control Signals: The ESP32 sends control signals to the L298N driver, which then adjusts the motor outputs accordingly.
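On an L298N channel, the IN1/IN2 pins select the direction and a PWM signal on the enable pin (ENA or ENB) sets the speed. A minimal sketch of that mapping for one channel follows; the 8-bit duty range (0 to 255) is an assumption about how the ESP32 firmware configures PWM.

```python
from typing import NamedTuple


class BridgeSignals(NamedTuple):
    in1: bool   # logic level written to IN1
    in2: bool   # logic level written to IN2
    duty: int   # PWM duty on the enable pin, 0..max_duty (8-bit assumed)


def l298n_signals(speed: float, max_duty: int = 255) -> BridgeSignals:
    """Convert a speed command in [-1.0, 1.0] to direction pins plus PWM duty.

    Positive speed drives forward (IN1 high, IN2 low); negative speed reverses
    (IN1 low, IN2 high). Zero leaves the enable pin low so the motor coasts.
    """
    speed = max(-1.0, min(1.0, speed))  # clamp out-of-range commands
    duty = round(abs(speed) * max_duty)
    if duty == 0:
        return BridgeSignals(False, False, 0)
    if speed > 0:
        return BridgeSignals(True, False, duty)
    return BridgeSignals(False, True, duty)
```

In the firmware, the three returned values would be written to the GPIOs wired to IN1, IN2 and ENA; the same function serves the second channel (IN3, IN4, ENB).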

Power Supply

The robot is powered by a rechargeable battery system, capable of providing the necessary voltage and current to all components.

- Battery Management: A battery management system (BMS) ensures safe operation, monitoring the state of charge and protecting against overcharging or deep discharge.

- Voltage Regulation: Voltage regulators are used to supply stable power to the Jetson Nano, ESP32, and other peripherals, ensuring consistent operation.

Software Components

The software architecture is built on the ROS framework, with additional custom software components developed for specific tasks.

- ROS Nodes: Each hardware component and functionality is encapsulated in a ROS node. For example, there are separate nodes for motor control, camera data processing, and sensor data acquisition.

- Inter-Node Communication: ROS topics and services facilitate communication between nodes, allowing data to be published and subscribed to across the system.

- Machine Learning Models: Deployed on the Jetson Nano, these models are trained for specific tasks such as identifying plant health issues and detecting weeds.

- React App: A web-based application that serves as the user interface. It provides real-time telemetry data, video feeds, and control options for the robot.

- Backend: The backend services communicate with the Jetson Nano and ESP32 to fetch data and send commands.
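A motor-control node typically turns a body velocity command into individual wheel speeds. The sketch below shows the differential-drive inverse kinematics such a node could use, assuming the robot steers by driving its left and right wheels at different speeds; the wheel radius and track width are placeholder values, not measurements of this robot.

```python
# Hypothetical geometry; replace with measured values for the actual chassis.
WHEEL_RADIUS_M = 0.065  # wheel radius in metres (placeholder)
TRACK_WIDTH_M = 0.40    # distance between left and right wheels (placeholder)


def twist_to_wheel_speeds(v: float, w: float,
                          radius: float = WHEEL_RADIUS_M,
                          track: float = TRACK_WIDTH_M) -> tuple[float, float]:
    """Map linear velocity v (m/s) and yaw rate w (rad/s, counter-clockwise
    positive) to (left, right) wheel angular speeds in rad/s."""
    v_left = v - w * track / 2.0   # ground speed of the left wheel
    v_right = v + w * track / 2.0  # ground speed of the right wheel
    return v_left / radius, v_right / radius
```

In a ROS 1 node this function would be called from the callback of a `rospy.Subscriber("cmd_vel", Twist, callback)`, passing `msg.linear.x` and `msg.angular.z`; the resulting wheel speeds would then be sent to the ESP32 as motor commands.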

Communication and Networking

The robot uses Wi-Fi for communication between the ESP32, Jetson Nano, and external devices like a control laptop or mobile device.

- Local Network: A local Wi-Fi network enables communication between the robot and the React App, providing real-time data access and control.

- Remote Access: The system can be configured to allow remote monitoring and control over the internet, enabling operators to manage the robot from distant locations.

Task Execution Modules

- Weeding Module: Includes the brush cutter mechanism controlled by the ESP32, which activates based on the detection of weeds.

- Pollination Module: A specialized blower mechanism for pollination, which can be controlled and monitored through the React App.

The system architecture of the multifunctional agricultural robot is a carefully integrated assembly of hardware and software components, each playing a crucial role in the robot's functionality. The Jetson Nano acts as the brain of the system, handling complex computations and machine learning tasks, while the ESP32 manages real-time control of motors and sensors. The combination of advanced sensors, robust software, and reliable communication systems ensures that the robot can autonomously navigate, perform essential agricultural tasks, and provide valuable data for crop management. This architecture not only enhances operational efficiency but also provides a platform for future enhancements and scalability.

Integration and Implementation

The integration and implementation phase of the multifunctional agricultural robot involves assembling the hardware components, configuring the software systems, and ensuring that all elements work seamlessly together. This comprehensive process includes mechanical assembly, electrical wiring, software installation, and system calibration. The goal is to create a fully functional robot capable of performing tasks such as weeding, pollination, and crop health monitoring. This report details the steps involved in each aspect of the integration and implementation process.

1. Hardware Assembly

The hardware assembly phase involves the physical construction of the robot, including the chassis, mounting of components, and wiring.

3D Design

a. Chassis and Frame Construction

- Design: The robot's chassis is designed to provide stability and support for all components. It is constructed using durable materials such as aluminum or steel to withstand rough agricultural environments.

- Assembly: The frame is assembled according to the design specifications. Key components such as the motors, wheels, and sensors are mounted onto the chassis. The layout is carefully planned to ensure balanced weight distribution and easy access for maintenance.

b. Motor and Actuator Installation

- Motors: The SparkFun DC motors with encoders are mounted at designated positions on the chassis. The motors are securely fastened to prevent movement during operation.

- Motor Driver: The L298N motor driver is installed and connected to the motors. The driver is responsible for controlling the motors' speed and direction based on signals from the ESP32 microcontroller.

c. Sensor and Camera Setup

- Intel RealSense Camera: The camera is mounted on the top of the robot, positioned to capture a clear view of the crops and surroundings. The camera's angle and height are adjusted for optimal depth perception and image capture.

- ESP32-CAM: This camera is positioned at the bottom of the robot to provide real-time video feedback of the ground area. It is mounted in a protective housing to prevent damage from debris.

d. Brush Cutter and Pollination Mechanism

- Brush Cutter: The brush cutter mechanism is installed at the bottom of the robot. It is connected to a motor that drives the cutting blade. The mechanism is designed to activate when weeds are detected.

- Pollination Mechanism: A specialized tool for pollination, such as a robotic arm or air blower, is integrated into the system. This mechanism can be controlled and adjusted as needed.

e. Wiring and Electrical Connection

- Power Supply: The robot's battery system is connected to the power distribution board. Voltage regulators are used to ensure a stable power supply to all components, including the Jetson Nano, ESP32, motors, and sensors.

- Signal Wiring: Wires are carefully routed to connect sensors, actuators, and controllers. Proper insulation and shielding are used to prevent interference and ensure signal integrity.

2. Software Setup

The software setup phase involves installing and configuring the operating systems, drivers, and application software on the Jetson Nano and ESP32.

a. Operating System and ROS Installation

- Jetson Nano: The Ubuntu operating system is installed on the Jetson Nano. ROS (Robot Operating System) is then set up as the middleware to manage communication between software nodes and hardware components.

- ROS Nodes: Nodes for motor control, sensor data processing, and camera feeds are created and configured. These nodes communicate via ROS topics and services.

- ESP32: The ESP32 is programmed with custom firmware that handles motor control, sensor data acquisition, and communication with the Jetson Nano.

b. Machine Learning Model Deployment

- Model Training: Machine learning models for tasks such as weed identification and crop health analysis are trained using labeled datasets. The models are then optimized for deployment on the Jetson Nano.

- Model Deployment: The trained models are deployed on the Jetson Nano, where they run inference in real time on the captured image data.

c. React App Development

- Frontend: The React App is developed to provide a user-friendly interface for monitoring and controlling the robot. It includes features like live video feeds, telemetry data, and control options.

- Backend: The backend services communicate with the Jetson Nano and ESP32, fetching data and sending commands. WebSockets or REST APIs are used for real-time communication.
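Whichever transport is used (WebSockets or REST), the backend is a natural place to reject malformed or unsafe commands before they reach the robot. The sketch below validates one JSON command; the message schema (`drive` and `tool` commands) and the tool names are hypothetical.

```python
import json

ALLOWED_TOOLS = {"brush_cutter", "blower"}  # hypothetical tool identifiers


def _is_unit_speed(value) -> bool:
    """True for a real number (not bool) in [-1, 1]; NaN fails the range test."""
    return (isinstance(value, (int, float)) and not isinstance(value, bool)
            and -1.0 <= value <= 1.0)


def parse_command(raw: str) -> dict:
    """Decode and validate one command from the React app.

    Raises ValueError for anything that should not be forwarded to the robot.
    """
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON: {exc}") from exc
    if not isinstance(msg, dict):
        raise ValueError("command must be a JSON object")

    kind = msg.get("type")
    if kind == "drive":
        left, right = msg.get("left"), msg.get("right")
        if not (_is_unit_speed(left) and _is_unit_speed(right)):
            raise ValueError("drive speeds must be numbers in [-1, 1]")
        return {"type": "drive", "left": float(left), "right": float(right)}
    if kind == "tool":
        if msg.get("name") not in ALLOWED_TOOLS or not isinstance(msg.get("on"), bool):
            raise ValueError("tool command needs a known name and boolean 'on'")
        return {"type": "tool", "name": msg["name"], "on": msg["on"]}
    raise ValueError(f"unknown command type {kind!r}")
```

A WebSocket or REST handler would call `parse_command` on each incoming message and forward only the normalised dictionary, returning an error to the browser otherwise.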

3. System Calibration

System calibration ensures that all components operate correctly and in harmony. This step involves fine-tuning the hardware and software to achieve optimal performance.

a. Sensor Calibration

- Camera Calibration: The Intel RealSense camera is calibrated for accurate depth perception, and both the RealSense camera and the ESP32-CAM are calibrated for image alignment. Intrinsic and extrinsic parameters are estimated to correct lens distortion and ensure precise data capture.

- Encoder Calibration: The encoders on the motors are calibrated to accurately measure wheel rotations and calculate the robot's position and speed.
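Once the encoders are calibrated, their tick counts can be integrated into a position estimate (wheel odometry). A minimal sketch for a differential drive follows; the ticks per revolution, wheel radius and track width are hypothetical placeholders that the calibration step would replace with measured values.

```python
import math

TICKS_PER_REV = 1440    # hypothetical encoder ticks per wheel revolution
WHEEL_RADIUS_M = 0.065  # hypothetical wheel radius in metres
TRACK_WIDTH_M = 0.40    # hypothetical distance between the wheels in metres


def update_pose(x, y, theta, d_ticks_left, d_ticks_right,
                ticks_per_rev=TICKS_PER_REV, radius=WHEEL_RADIUS_M,
                track=TRACK_WIDTH_M):
    """Integrate one pair of tick deltas into the pose (x, y in m, theta in rad)."""
    metres_per_tick = 2.0 * math.pi * radius / ticks_per_rev
    d_left = d_ticks_left * metres_per_tick
    d_right = d_ticks_right * metres_per_tick
    d_center = (d_left + d_right) / 2.0   # distance travelled by the midpoint
    d_theta = (d_right - d_left) / track  # change in heading
    # Midpoint integration: advance along the average heading of this step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    # Wrap the heading into (-pi, pi].
    theta = math.atan2(math.sin(theta + d_theta), math.cos(theta + d_theta))
    return x, y, theta
```

A calibration run can check these constants directly: driving a measured straight distance should yield the same `x`, and an in-place turn of known angle should yield the same change in `theta`.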

b. Actuator Calibration

- Motor Control: The motor control algorithms are fine-tuned to ensure smooth and precise movement. Parameters such as speed, acceleration, and braking are adjusted for optimal performance.

- Brush Cutter and Pollination Mechanism: The activation and control of the brush cutter and pollination mechanism are calibrated to ensure efficient operation.

c. System Integration Testing

- Component Testing: Each component is tested individually to verify its functionality. Sensors, cameras, motors, and other peripherals are checked for proper operation.

- Subsystem Testing: The robot's subsystems (navigation, task execution, and data processing) are tested in isolation to identify and resolve any issues.

- Full System Testing: The entire system is tested as a whole to ensure all components work together seamlessly. Integration testing includes navigating the robot, performing weeding and pollination tasks, and monitoring crop health.

4. Field Testing and Validation

The final step involves testing the robot in real-world agricultural environments to validate its performance.

a. Field Trials

- Navigation: The robot is tested for its ability to autonomously navigate through fields, avoid obstacles, and follow planned paths.

- Weeding and Pollination: The effectiveness of the brush cutter and pollination mechanism is evaluated in actual field conditions.

- Crop Health Monitoring: The accuracy of the crop health monitoring system is assessed by comparing the robot's data with manual observations.

b. Performance Metrics

- Efficiency: The robot's efficiency in covering the field and performing tasks is measured. Metrics include time taken, area covered, and energy consumption.

- Accuracy: The accuracy of the robot's navigation, task execution, and data analysis is evaluated. Metrics include error rates, precision, and recall.

- Reliability: The system's reliability is tested by running it over extended periods and under different environmental conditions.
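Precision and recall follow directly from counts gathered in the field trials: true positives (weeds correctly detected), false positives (plants wrongly flagged as weeds) and false negatives (weeds missed). The helper below computes them, plus the F1 score; it is a generic sketch and no trial results are implied.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and F1 from detection counts.

    Ratios with a zero denominator are reported as 0.0.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}
```

For example, with illustrative counts of 8 true positives, 2 false positives and 2 false negatives, precision and recall are both 8 / 10 = 0.8.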

c. User Feedback and Iteration

- User Feedback: Feedback from farmers and operators is collected to understand the robot's usability and effectiveness. Suggestions for improvement are noted.

- Iteration: Based on the feedback and test results, iterative improvements are made to the hardware and software. This may involve refining algorithms, enhancing the user interface, or upgrading components.

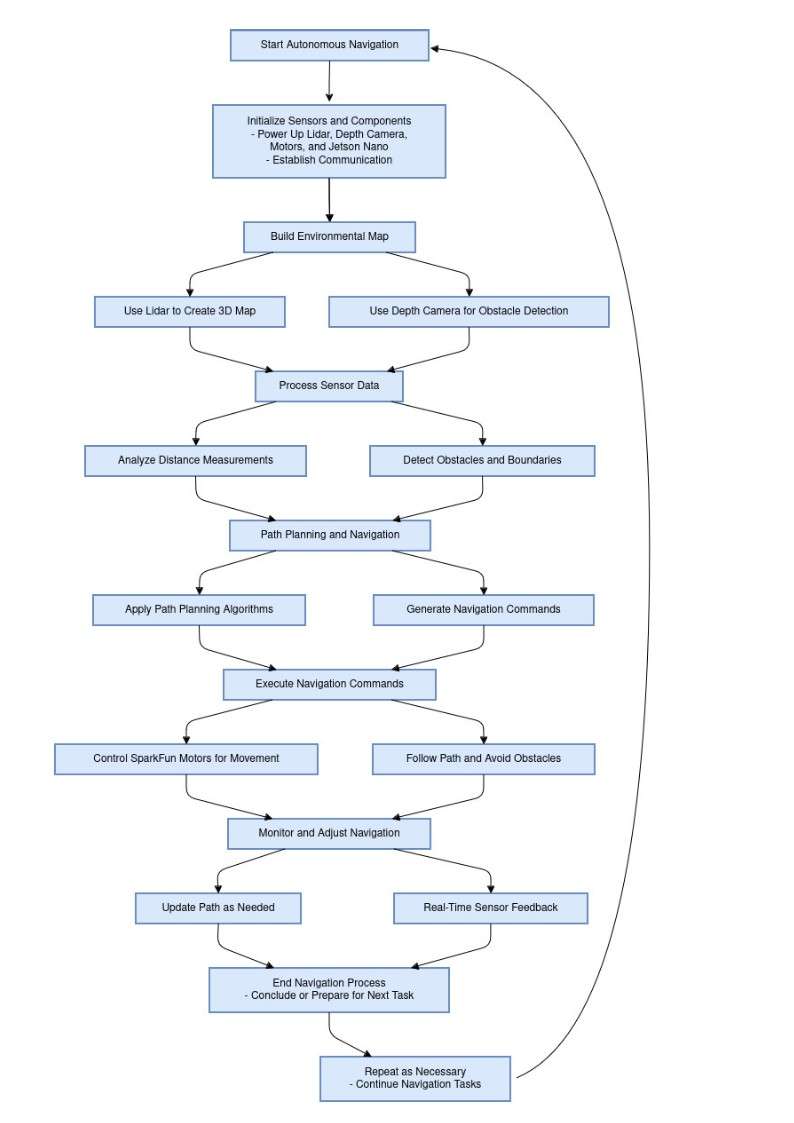

Autonomous Navigation

Autonomous navigation in the multifunctional agricultural robot, powered by the Robot Operating System (ROS), is a comprehensive system that integrates various components to enable the robot to operate independently in agricultural environments. The architecture includes mapping, localization, path planning, and control modules, each playing a crucial role in the robot's functionality. The mapping process uses ROS packages such as gmapping, RTAB-Map, and Cartographer to create 2D or 3D maps of the environment from sensor data such as camera images and LiDAR scans. Simultaneous Localization and Mapping (SLAM) is employed to build and maintain these maps while determining the robot's position within them. For localization, methods such as AMCL (Adaptive Monte Carlo Localization) and visual SLAM are used to estimate the robot's pose accurately. Path planning, a key aspect of navigation, combines a global planner such as navfn with a local planner such as DWA (Dynamic Window Approach) or the TEB (Timed Elastic Band) Local Planner.

These planners generate safe and efficient paths from the robot's current position to a target location while avoiding obstacles. The control system, managed through ROS Control and Controller Manager, executes these plans by sending precise commands to the robot's actuators, ensuring smooth and accurate movements. Obstacle detection and avoidance are facilitated through a layered costmap and sensor fusion, integrating data from multiple sensors to create a detailed understanding of the environment.
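To make the global-planning step concrete, here is a small A* search over a 2D occupancy grid. It illustrates the kind of search a global planner runs over a costmap but is not navfn's implementation (navfn computes a Dijkstra-style navigation function). Cells marked 1 are treated as obstacles, and moves are 4-connected with unit cost; both are simplifications.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (obstacle) cells.

    Returns a list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # follow parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

A real costmap stores graded costs (inflated around obstacles) rather than 0/1 cells; replacing the unit step cost with the cell cost turns this into a cost-aware planner while keeping the same structure.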

Testing and debugging are essential parts of the development process, with tools like Gazebo for simulation, RViz for visualization, and ROS logging capabilities for data analysis and optimization. Together, these components enable the robot to perform tasks such as weeding and pollination autonomously, making it an invaluable tool in modern agriculture.

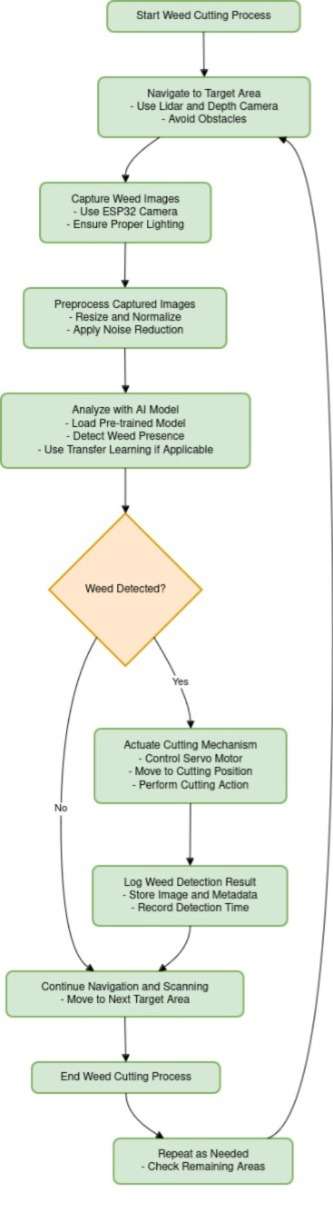

Task Execution: Weeding and Pollination

The multifunctional agricultural robot is designed to perform two primary tasks: weeding and pollination. These tasks are crucial for maintaining crop health and enhancing yield. The robot employs advanced sensors, actuators, and control systems to execute these tasks autonomously and efficiently. This report provides a detailed explanation of the methods, components, and processes involved in the execution of weeding and pollination tasks.

1. Weeding

Weeding is the process of removing unwanted plants (weeds) that compete with crops for nutrients, water, and sunlight. The robot is equipped with specialized tools and sensors to identify and eliminate weeds without damaging the crops.

a. Weed Detection

Weed detection is the first step in the weeding process. It involves identifying the location and type of weeds present in the field.

- Sensors and Cameras: The robot uses a combination of sensors, such as RGB cameras and depth sensors (e.g., Intel RealSense), to capture images of the field. The ESP32-CAM, positioned at the bottom of the robot, provides real-time video feedback, focusing on the ground area.

- Image Processing and Machine Learning: Advanced image processing techniques and machine learning algorithms are employed to distinguish weeds from crops. The algorithms are trained on a dataset containing labeled images of crops and weeds. Techniques like Convolutional Neural Networks (CNNs) are used for classification, while segmentation algorithms help in identifying the exact location and boundaries of the weeds.

- Decision Making: Once the weeds are detected, the system decides whether they need to be removed based on factors like size, proximity to crops, and type of weed.
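The decision step can be expressed as a few explicit rules. The sketch below follows the factors listed above, with weed type reduced to the detector's class label; all thresholds and labels are hypothetical placeholders that would need tuning for each crop.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    label: str          # class predicted by the detector, e.g. "weed" or "crop"
    confidence: float   # detector score in [0, 1]
    x: float            # centre position in the robot frame, metres
    y: float
    area_cm2: float     # estimated leaf area of the plant


MIN_CONFIDENCE = 0.6     # hypothetical: ignore uncertain detections
MIN_AREA_CM2 = 4.0       # hypothetical: ignore weeds too small to matter yet
CROP_CLEARANCE_M = 0.10  # hypothetical safety margin around every crop plant


def should_remove(weed: Detection, crops: list[Detection]) -> bool:
    """True if the detection is a confident, large-enough weed clear of all crops."""
    if weed.label != "weed" or weed.confidence < MIN_CONFIDENCE:
        return False
    if weed.area_cm2 < MIN_AREA_CM2:
        return False
    return all(math.hypot(weed.x - c.x, weed.y - c.y) >= CROP_CLEARANCE_M
               for c in crops)
```

Keeping the rules explicit makes each threshold easy to audit in the field, and a learned classifier's output can still feed the `confidence` value.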

b. Weed Removal Mechanism

After detecting the weeds, the robot activates its weed removal mechanism to eliminate them.

- Mechanical Weeding Tool: The primary tool for weed removal is a brush cutter mechanism. It consists of rotating blades powered by a motor, capable of cutting through the weeds. The mechanism is mounted on the bottom of the robot and can be adjusted in height to target weeds of different sizes.

- Activation and Control: The robot's control system manages the activation of the brush cutter. It uses the weed detection data to position the cutter accurately over the weeds. The control system ensures that the blades engage only when weeds are present, minimizing the risk of damaging crops.

- Safety Measures: To prevent damage to the crops, the robot uses additional sensors (e.g., proximity sensors) to detect the presence of crops and adjust the cutter's position accordingly. The system also incorporates emergency stop functions to halt the mechanism if an unexpected object is detected.

c. Efficiency and Optimization

The efficiency of the weeding process is critical for large-scale agricultural operations.

- Path Planning for Weeding: The robot uses a specialized path-planning algorithm to cover the entire field systematically. The path is planned to maximize coverage and minimize time, ensuring that all areas are checked for weeds.

- Adaptive Weeding: The robot can adapt its weeding strategy based on the density of weeds and the type of crops. For example, in areas with high weed density, the robot may employ a more aggressive weeding approach, while in sensitive crop areas, it uses a gentler approach.
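One common way to cover a field systematically is a boustrophedon (serpentine) pattern: parallel lanes driven in alternating directions. The sketch below generates such waypoints, assuming an obstacle-free rectangular plot and a swath width equal to the usable width of the brush cutter; both are simplifications of the path planning described above.

```python
import math


def boustrophedon(width_m: float, length_m: float, swath_m: float):
    """Waypoints (x, y) for lanes along y that alternate direction.

    Lanes are spaced evenly so that no lane spacing exceeds the swath width,
    which guarantees full coverage (with some overlap) of the rectangle.
    """
    if swath_m <= 0 or width_m <= 0:
        raise ValueError("plot width and swath width must be positive")
    lanes = math.ceil(width_m / swath_m)
    spacing = width_m / lanes
    waypoints = []
    for i in range(lanes):
        x = (i + 0.5) * spacing  # centre line of lane i
        if i % 2 == 0:
            waypoints += [(x, 0.0), (x, length_m)]  # drive up the lane
        else:
            waypoints += [(x, length_m), (x, 0.0)]  # drive back down
    return waypoints
```

The resulting waypoints could be sent one after another as navigation goals; in fields with obstacles, the plot would first be decomposed into obstacle-free cells, each covered this way.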

2. Pollination

Pollination is the process of transferring pollen from the male parts of a flower to the female parts, enabling fertilization. In some crops, especially in controlled environments like greenhouses, artificial pollination is necessary to ensure fruit production.

a. Pollination Detection

The first step in artificial pollination is identifying flowers that require pollination.

- Flower Detection: Similar to weed detection, the robot uses cameras and image processing algorithms to identify flowers. The system distinguishes between flowers that are ready for pollination and those that are not. Key characteristics such as flower color, size, and shape are analyzed.

- Data Analysis: The robot can store and analyze data about the flowering stage of the plants, helping to determine the optimal time for pollination.

b. Pollination Mechanism

The robot uses a specialized mechanism to transfer pollen from one flower to another.

- Robotic Arm: The pollination tool is often attached to a robotic arm, providing the flexibility to reach flowers at different heights and angles. The arm's movements are precisely controlled to avoid damaging the flowers.

- Pollination Tools: The tools used for pollination can vary depending on the type of crop. Common tools include:

- Air Blowers: Air blowers can disperse pollen over a wider area, suitable for crops with many flowers.

- Control and Precision: The robot's control system carefully coordinates the movements of the robotic arm and the activation of the pollination tool. The system ensures that the pollen is transferred accurately, maximizing the chances of successful fertilization.

c. Pollination Optimization

Efficient pollination is crucial for maximizing crop yield.

- Selective Pollination: The robot can perform selective pollination, targeting specific flowers or areas based on factors like flower maturity and expected fruit quality.

- Pollination Scheduling: The robot can be programmed with a pollination schedule, optimizing the process based on the growth cycle of the plants and environmental conditions.

3. Integration and Coordination

The execution of weeding and pollination tasks requires seamless integration of hardware and software components.

- Task Coordination: The robot's onboard computer (e.g., Jetson Nano) coordinates the execution of weeding and pollination tasks. It prioritizes tasks based on the current field conditions and the robot's position.

- Multi-Tasking: The robot is capable of performing multiple tasks simultaneously, such as weeding while navigating to a new location for pollination. The control system ensures smooth transitions between tasks and prevents conflicts.

- User Interface: The robot's operation can be monitored and controlled through a React-based application. The user interface provides real-time video feeds, task status, and control options, allowing the operator to intervene if necessary.
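As an illustration of task prioritisation, the sketch below keeps pending weeding and pollination jobs in a priority queue ordered by urgency and then by distance from the robot. The ordering rule and the urgency scale are assumptions, not the robot's actual scheduling policy.

```python
import heapq
import itertools
import math


class TaskQueue:
    """Min-heap of tasks ordered by (urgency, distance to robot, arrival order).

    Lower urgency values are served first (hypothetical scale: 0 = most urgent).
    Distance is computed when a task is queued; a real scheduler would re-rank
    pending tasks as the robot moves.
    """

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps equal keys FIFO

    def push(self, kind, x, y, urgency, robot_xy):
        distance = math.hypot(x - robot_xy[0], y - robot_xy[1])
        heapq.heappush(self._heap,
                       (urgency, distance, next(self._counter), kind, (x, y)))

    def pop(self):
        """Remove and return (kind, (x, y)) of the highest-priority task."""
        _, _, _, kind, target = heapq.heappop(self._heap)
        return kind, target

    def __len__(self):
        return len(self._heap)
```

Usage: push each detected job with its urgency and the robot's current position, then pop the next job whenever the previous one finishes.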

4. Testing and Validation

The weeding and pollination mechanisms are tested and validated in controlled environments and actual fields.

- Controlled Testing: Initial testing is done in controlled environments, such as greenhouses, where variables can be controlled. This phase helps in fine-tuning the detection and execution mechanisms.

- Field Testing: The robot is tested in real-world agricultural settings to evaluate its performance under different conditions. Field testing helps in assessing the robot's efficiency, accuracy, and reliability in performing weeding and pollination tasks.

- Performance Metrics: Key performance metrics include the accuracy of weed and flower detection, the success rate of weed removal and pollination, the time taken for each task, and the overall impact on crop yield.

The multifunctional agricultural robot leverages advanced technologies to autonomously execute weeding and pollination tasks. Through the integration of sophisticated sensors, machine learning algorithms, and precise mechanical tools, the robot can effectively identify and eliminate weeds and perform artificial pollination. The system's design ensures efficient task execution, adaptability to various crop types, and user-friendly operation. Rigorous testing and validation further enhance the robot's reliability and effectiveness, making it a valuable asset in modern agriculture.

Crop Health Monitoring and Analysis Using Intel RealSense Camera and React Application

The multifunctional agricultural robot is equipped with an Intel RealSense camera to monitor crop health and identify potential issues such as diseases and readiness for pollination. The camera captures high-resolution images and depth data, which are processed using computer vision algorithms. A React application provides a user-friendly interface for monitoring real-time data, analyzing crop health, and offering actionable insights. This report details the hardware and software components involved, the data processing pipeline, and the functionalities of the React application.

1. Intel RealSense Camera and Data Acquisition


The Intel RealSense camera is a key component in the robot's crop health monitoring system. It captures both RGB images and depth data, providing a rich dataset for analysis.

a. Camera Specifications and Setup

- Model: Intel RealSense D455

- Features: The camera features a high-resolution RGB sensor and a stereo depth sensor, allowing it to capture color images and depth information simultaneously.

- Mounting: The camera is mounted on the top of the robot, positioned to capture a wide field of view and focus on the crop canopy.

- Data Capture: The camera continuously captures data as the robot navigates the field, recording RGB images and depth maps at specified intervals.

b. Data Acquisition

- Image Capture: The RGB sensor captures high-quality images of the crops, including details such as leaf color, texture, and overall appearance.

- Depth Data: The stereo depth sensor measures the distance to various points in the scene, creating a 3D representation of the crop canopy. This data helps in assessing plant height, density, and spatial arrangement.
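Turning a depth pixel into a 3D point uses the pinhole camera model and the camera intrinsics. For an image without lens distortion, this is the same computation librealsense performs in `rs2_deproject_pixel_to_point`. The intrinsic values in the usage check are placeholders (on the robot they would be read from the D455 stream profile), and the plant-height helper assumes the camera looks straight down, which the mounting description does not confirm.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float   # focal length in pixels along x
    fy: float   # focal length in pixels along y
    ppx: float  # principal point x, pixels
    ppy: float  # principal point y, pixels


def deproject(intr: Intrinsics, u: float, v: float, depth_m: float):
    """Pixel (u, v) with depth in metres -> (X, Y, Z) in the camera frame.

    Pinhole model without distortion correction.
    """
    x = (u - intr.ppx) / intr.fx * depth_m
    y = (v - intr.ppy) / intr.fy * depth_m
    return x, y, depth_m


def canopy_height(camera_height_m: float, depth_to_top_m: float) -> float:
    """Plant height, assuming a camera pointing straight down at the ground."""
    return max(0.0, camera_height_m - depth_to_top_m)
```

Raw RealSense depth frames store 16-bit values that must be multiplied by the sensor's depth scale to obtain metres before being passed to `deproject`.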

2. Computer Vision and Machine Learning Algorithms

The captured data is processed using advanced computer vision and machine learning algorithms to monitor crop health, identify diseases, and assess readiness for pollination.

a. Preprocessing

- Image Enhancement: Techniques such as histogram equalization and noise reduction are applied to enhance image quality and highlight relevant features.

- Segmentation: The images are segmented to separate individual plants from the background. Segmentation algorithms like U-Net or Mask R-CNN can be used to accurately delineate plant boundaries.
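Histogram equalisation spreads the intensity values of an image across the full range by remapping each level through the cumulative histogram. The pure-Python sketch below implements the classic global formulation on an 8-bit grayscale image stored as a list of rows; on the Jetson the same operation would normally come from OpenCV's `cv2.equalizeHist`.

```python
def equalize_hist(image):
    """Global histogram equalisation of a non-empty 8-bit grayscale image.

    Each level v maps to round((cdf(v) - cdf_min) / (N - cdf_min) * 255),
    where N is the pixel count and cdf_min the first non-zero CDF value.
    """
    pixels = [p for row in image for p in row]
    if not pixels:
        raise ValueError("image must be non-empty")
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution of intensity levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:  # single intensity level: nothing to stretch
        return [row[:] for row in image]
    lut = [round((c - cdf_min) / (total - cdf_min) * 255) if c > 0 else 0
           for c in cdf]
    return [[lut[p] for p in row] for row in image]
```

Global equalisation can over-amplify noise in images with large uniform regions such as bare soil; a locally adaptive variant (CLAHE) is a common alternative for field imagery.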

b. Disease Detection and Classification

- Feature Extraction: Key features, such as color, texture, and shape, are extracted from the segmented images. These features are crucial for identifying disease symptoms, such as discoloration, lesions, or abnormal growth patterns.

- Model Training: A convolutional neural network (CNN) or a similar deep learning model is trained on a labeled dataset containing images of healthy and diseased plants. The model learns to classify images based on the presence and type of disease.

- Disease Identification: During operation, the model analyzes the captured images, classifying them into different disease categories. For example, it can identify common diseases like powdery mildew, leaf spot, or blight.

c. Health Analysis and Readiness for Pollination

- Health Metrics: The system calculates various health metrics, such as leaf area index, chlorophyll content estimation (using spectral analysis if available), and overall plant vigor.

- Pollination Readiness: The algorithm assesses the flowering stage of the plants. By detecting flower buds and blooming stages, it determines whether the crops are ready for pollination.

d. Field Affected Area Calculation

- Disease Spread Analysis: The system calculates the percentage of the field affected by diseases by analyzing the proportion of diseased plants or plant parts. This involves counting the number of plants identified as diseased and comparing it to the total number of plants.

- Geospatial Mapping: Using depth data, the system can create a 3D map of the field, overlaying disease information. This helps in visualizing the spatial distribution of affected areas.
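The affected-area calculation described above is a ratio: affected (%) = diseased plants / total plants × 100. The sketch below computes it for the whole field and per zone, which is the form a map overlay would need. The `(zone_id, is_diseased)` input format and zone names are hypothetical, and the result is a plant-count incidence; an area-based figure would weight each plant by its leaf area instead.

```python
from collections import defaultdict


def disease_incidence(detections):
    """Percent of diseased plants overall and per zone.

    detections: iterable of (zone_id, is_diseased) pairs, one per plant.
    Returns (overall_percent, {zone_id: percent}).
    """
    totals = defaultdict(int)
    diseased = defaultdict(int)
    for zone, is_diseased in detections:
        totals[zone] += 1
        if is_diseased:
            diseased[zone] += 1
    per_zone = {z: 100.0 * diseased[z] / totals[z] for z in totals}
    n = sum(totals.values())
    overall = 100.0 * sum(diseased.values()) / n if n else 0.0
    return overall, per_zone
```

For instance, a zone with 1 diseased plant out of 4 has an incidence of 25%.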

3. React Application for Monitoring and Analysis

The React application serves as the user interface for monitoring real-time data from the Intel RealSense camera and providing insights into crop health. It integrates various features to offer a comprehensive analysis of the field.

a. User Interface and Dashboard

- Real-Time Data Display: The application displays real-time video feeds from the camera, along with overlays of detected diseases and affected areas.

- Field Overview: A map view shows the field layout, highlighting areas with different crops and indicating the severity of diseases in various sections.

b. Crop and Disease Information

- Crop Type Identification: The application identifies and displays the types of crops present in the field. This information is crucial for tailoring disease detection algorithms and providing accurate solutions.

- Disease Classification and Solutions: For each detected disease, the application provides a detailed description, including symptoms, potential causes, and recommended treatment options. This information helps farmers take timely and appropriate action.

c. Pollination Readiness Indication

- Flower Detection: The application indicates which crops are ready for pollination based on flower detection data. This feature is critical for planning pollination tasks, especially in controlled environments like greenhouses.

- Alerts and Notifications: The system can send alerts when a significant portion of the field is ready for pollination or when critical diseases are detected. This ensures timely intervention and efficient management.

d. Data Analytics and Reporting

- Health Metrics Visualization: The application visualizes health metrics over time, allowing users to track changes and trends in crop health. Graphs and charts display parameters like disease spread, plant growth, and overall field health.

- Custom Reports: Users can generate custom reports based on the collected data, including disease incidence, crop health status, and pollination readiness. These reports are valuable for farm management and decision-making.

e. User Interaction and Controls

- Manual Control: The application allows users to manually control the robot's movement and tasks, providing flexibility in operations.

- Data Annotation: Users can annotate data, such as marking specific areas of interest or noting observations. This feature is useful for training machine learning models and improving system accuracy.

The multifunctional agricultural robot, equipped with an Intel RealSense camera and controlled via a React application, offers a sophisticated solution for monitoring crop health and managing agricultural tasks. The system leverages advanced computer vision and machine learning techniques to detect diseases, assess crop health, and determine pollination readiness. The React application provides a comprehensive and intuitive interface for real-time monitoring, data analysis, and actionable insights. This integrated approach enhances the efficiency and effectiveness of farm management, helping to ensure healthy crops and optimal yields.